Automated Test Harness¶

| Authors | Greg Shah Constantin Asofiei |

| Date | September 6, 2021 |

| Harness Version | 1.1 |

- Automated Test Harness

- Introduction

- Project Structure

- Installation

- License

- Prerequisites

- Building the Project

- Design

- Running the Harness

- Encoding Test Definitions

- Avoiding Test Failures

- Web Test Directive Reference

- Test Directive Reference

- acquire-mutex

- binary-file-comparison

- check-cursor-pos

- check-post-count

- check-screen-buffer

- check-variable

- clear-screen-buffer

- include

- log

- log-cursor-pos

- log-screen-buffer

- pause

- post-event-sem

- read-cursor-pos

- read-string

- release-mutex

- reset-event-sem

- rest

- run-code

- send-special-key

- send-text

- SFTP

- soap

- text-file-comparison

- variable

- wait-event-sem

- wait-for-screen-buffer

- Test Plan Definition

- Project Index Definition

- Download Directory

- Benchmark mode (Performance test support mode)

- TODOs (and Limitations)

- Credits

Introduction¶

This document provides the specification, design, implementation and usage details for both a language and a tool that provides an automated and non-interactive mechanism for testing terminal (TTY) based applications.

Main features:- Basic Usage

- Provides a language for encoding terminal application input and output processing in a manner that closely simulates how a user operates. The encoded input/output processing are known as test definitions.

- Using these encoded test definitions, it simulates user input (keyboard only, no mouse) to a terminal application (an application with a TTY or "character" interface) without a requiring an interactive user.

- Allows control over the timing of the input using methods such as pausing for a fixed interval or waiting for a specific visual response.

- Screen and report baselines (with optional exclusion regions) can be compared to screens or reports captured during testing, failing a test if any deviations are found.

- Tests

- A test is an XML file that defines the simulated input, timing, output checks and other actions which allows a given result or results to be tested on a non-interactive basis.

- Tests can be grouped into related groups called test-sets (a list of one or more tests).

- Test Plans

- A test-plan is an XML file that defines the complete operation of a testing run.

- Test plans specify a list of test-sets to execute.

- Each test-set is associated with a specific target system to be tested. That target system has a specific configuration of how to connect and the terminal configuration to use.

- A project level index file can also be specified. This project index provides configuration of pathing, named rectangles and project level resource definitions (such as named variables or semaphores).

- Sessions

- A session is a thread which has a terminal-based connection to a specific target system under test.

- Each session can be running a single test at a time.

- Multiple sessions can be run simultaneously.

- By default each test-set has 1 thread (and thus 1 session). The number of threads for a given test-set can be explicitly configured as any positive number.

- Job Queues

- A job queue is a list of tests which should be run on a specific target system. Each test-set has one and only one job queue. The tests for a test-set are the contents of the job queue.

- Multiple job queues can be in existence simultaneously, but only if you have multiple test-sets. You always have the same number of job queues as you have of test-sets.

- Each session is assigned to one and only one job queue.

- When needing a test to run, the session will read a test definition from its associated job queue.

- Multiple sessions can be associated with the same job queue by specifying a thread count > 1 for the given test-set.

- Dependencies

- Test Sets can be defined with one of the following dependency types:

- pre-condition (they execute first before other test sets and any failures abort the test plan run)

- none (they execute simultaneous with all other test sets)

- post-condition (they execute after all other test sets are complete)

- Tests can be specified to be dependent upon other named tests (or groups of tests) which constrains execution in the following ways:

- pre-condition (the test is executed first and will be executed on each session in the test-set)

- none (no constraints)

- critical path (execution of this test will stop any further tests from being dequeued/processed until this test has completed processing)

- concurrent (the test will be started at the same time as other tests marked concurrent and assigned to the same group name)

- sequential (the test will execute on the same session as others marked sequential and assigned to the same group name and the execution will be forced to be in the same order as encoded in the test-set)

- specific (this test will not run until the specifically named test has successfully completed; if the test upon which this one is dependent fails, then this test will be marked as a failed dependency and will not run)

- post-condition (the test is executed last and will be executed on each session in the test-set)

- Test Sets can be defined with one of the following dependency types:

- Failure Modes - tests can be marked to have the following behaviors when they fail:

- ignore (just mark this test as failed and continue processing other tests)

- abort thread (mark this test as failed and exit the session thread such that no other tests will execute on this thread)

- backout (mark this test as failed and execute a specified set of backout steps to attempt to leave the terminal session in a state where it is safe to execute further tests)

- Summary and detailed results reports are created for each test run. These reports are created as a set of hyperlinked HTML pages.

- Connections to back end systems can be made via SSH2 and SFTP.

- Terminal types supported are vt220 and vt320.

- Written in 100% Java.

In this document the "automated TTY test harness" is also referred to as the "harness".

Project Structure¶

The following is a list of the key files and directories in the project:

| Relative Path | Purpose |

|---|---|

| build/classes/ | Target location where compiled classes will be stored. |

| build/lib/ | Target location for the generated jar file and any dependent jar files (for jsch and jta). |

| build.xml | ANT build script. |

| diagrams/design.odp | High level design diagrams in OpenOffice.org format. |

| dist/ | Target location for generated JavaDoc. |

| docs/ | Non-JavaDoc documentation. |

| lib/ | Location to store dependent jar files. |

| license.txt | GPL v3 license |

| manifest/harness.mf | Manifest file for the harness.jar file. |

| other/ | Necessary source code patches for related projects. |

| src/com/goldencode/compile/ | Dynamic (in-memory, runtime) compilation helpers. |

| src/com/goldencode/harness/ | Main directory for the Automated TTY Harness Test which includes core base classes, interfaces, enums and the command line driver itself. |

| src/com/goldencode/harness/terminal/ | Terminal module that hides the implementation and usage of the terminal emulation layer. |

| src/com/goldencode/harness/test/ | Implementation of the tests and test directives. |

| src/com/goldencode/harness/transport/ | Networking module that implements a generic interface as well as the SSH2 session. |

| src/com/goldencode/html | HTML generation helpers. |

| src/com/goldencode/io/ | Stream and file system helper classes. |

| src/com/goldencode/lang/ | Runtime language processing. |

| src/com/goldencode/util | Miscellaneous utilities such as logging. |

Installation¶

For development and/or build purposes, the project can be installed by obtaining the project code in a zip or tarball form and unzipping/extracting the files into the file system.

For runtime usage, the only requirement is to obtain the main jar file (harness.jar) and any of the dependent jar files (see below). There are no other installation dependencies. The easiest way to handle this is to copy all of the jars in the build/lib/ directory of a development version of the project. Once available on a system, the harness tools can be run so long as the CLASSPATH is properly set. Note that the harness.jar manifest file will automatically resolve dependencies upon the other jar files so long as those files can be found in the same directory as the harness.jar.

License¶

The harness code is released using the Affero GPL v3 (AGPL).

Usage of the harness via the included user interfaces (e.g. command line driver programs) does not require the release of any technology by the user under the AGPL.

Programmatic usage of the harness code (e.g. creating your own user interface and calling harness Java classes to use their functionality) MAY require that the calling code be released using the same license. The reason for this is that using a Java class in this way is considered linking with the harness code, which creates a single work. By the terms of the AGPL, if such linked code is distributed outside of your organization or is accessed via a network , then all of the source code for that larger work (including the calling code) MUST also be released under the AGPL.

The test definitions or other data inputs to the harness are NEVER required to be released or licensed under the AGPL.

For more details, see License.

Prerequisites¶

TODO: Link to the original projects and their licenses.

TODO: Provide our own hosted versions of the source for these projects so that it is always available.

The following projects are required for this harness to compile and operate:

| Project | Version | Description | Compile/Use | License | Installation |

|---|---|---|---|---|---|

| ant | 1.6.1 or later | Apache ANT build tool for Java | Needed only for compilation. | Apache License v2.0 | Normally this can be installed using a platform-specific install tool. In Ubuntu, this is found in the package management repositories under the name "ant". |

| Java | 6.0 or later | Java Virtual Machine (JVM) and J2SE class libraries | A full Java Development Kit (JDK) is required for compilation. Only a Java runtime is needed for usage. |

? | Platform-specific Sun J2SE download and installer OR via package management system. In Ubuntu, this is found in the package management repositories under the name "sun-java6-jdk" (for the full Java Development Kit). |

| jsh | 0.1.41 or later With Java 7, 0.1.41 randomly generates ssh connection errors; this problem was fixed in 0.1.50 RC1. Use 0.1.50 RC1 or a later version on systems running Java 7. |

Java Secure Channel (provides SSH2 and SCP protocol support) | Needed for both compilation and use. | BSD style | Download the binary distribution (a single jar file), place it in the lib/ directory and edit the manifest/harness.mf to change the file name if needed. |

| jta | 2.6 with a custom patch | Java vt220 and vt320 Terminal Support | Needed for both compilation and use. | GPL v2 or later | Download the source distribution (jta26-src.jar) and unzip it. Apply the provided patches using the following commands (where jta26/ is the directory containing the unzipped JTA source code and harness/ is the directory containing the Harness project's source code): patch jta26/de/mud/terminal/vt320.java harness/other/jta26_screen_corruption_fix_20090521.patch patch jta26/de/mud/terminal/vt320.java harness/other/jta26_screen_corruption_fix_20090717.patch Then build a patched jar file using this command: cd jta26 mkdir jar ant dist The build does not automatically create the output directory for the jars. But you only have to create it once. Copy the jta26/jar/jta26.jar into the lib/ directory and edit the manifest/harness.mf to change the file name if needed. |

For all of the above listed dependencies, the code is NOT included with this project. You MUST "install" the code (per the instructions in the table above) BEFORE trying to compile or run the harness.

Building the Project¶

To build the project, change directory to the top-level project directory. This is the same directory in which the file build.xml resides. From that location, run the following:

ant all

The proper directory structure will be created if needed or if already existing, any previous results will be deleted. Then the Java source code will be compiled to the binary class file format and those classes will be turned into the build/lib/harness.jar file. The dependent jars will be copied from lib/ into the build/lib/ directory. Finally, the JavaDoc will be created in the dist/ directory.

Possible build targets (used with the command ant <target>):

- all

- clean

- prepare

- compile

- jar

- javadoc

Design¶

The harness project can be thought of in 2 parts:

- a language (in which programs can be written) to automate the input and output to a terminal (TTY) application

- a runtime environment that allows the execution of the harness programs and the reporting upon the success/failure and details of that execution run

The objective of the harness is to deterministically and reliably encode and execute testing of TTY applications on an automated basis. The complexity of this is easy to underestimate due to a wide variety of problems must be planned for, but which are naturally and easily handled when a user is sitting in front of the application. Please see the Avoiding Test Failures for details.

Each program in the harness language corresponds to a single test. A test is an ordered list of directives (i.e. test steps) which are executed sequentially. During execution, each step's results (success/failure, elapsed time and other details of the operation) are stored. The first step that fails causes the rest of the test to abort (subsequent steps do not execute). These programs are encoded in XML files known as "test definitions". See Encoding Test Definitions for more details. For details on all possible test directives, please see the Test Directive Reference.

Automating a single test is useful, but it is also important to allow the automation of arbitrarily large numbers of tests. To that end the harness language provides a mechanism for grouping tests called a "test set". Test sets are lists of tests which are to be processed against the same back-end target system AND which are processed by a common thread pool. The execution of a complete testing run can include one or more test sets and the configuration of this is encoded in an XML file known as a "test plan". See Test Plan Definition for more details.

The test plan is executed in 3 phases: pre-conditions, regular processing and post-conditions. Each test set can be assigned a "dependency" type from the following:

- PRE_CONDITION

- NONE

- POST_CONDITION

The 1st phase of execution will simultaneously execute all test sets marked as PRE_CONDITION dependencies. When all of these have completed processing, if any test sets have a FAILED status, then the remainder of the test plan will abort. This allows any environment preparation to be completed before the core testing occurs.

If the test plan does not abort, the 2nd phase of execution will simultaneously process all test sets that have no dependency (dependency type set to NONE which is the default).

No matter what the result of the 2nd phase, if the 2nd phase runs, then the 3rd phase will run as soon as the 2nd phase is complete. The 3rd phase executes all POST_CONDITION test sets simultaneously. This is a way to ensure that cleanup processing happens at the end of the test plan run.

The harness language also provides for configuration values that are global to an entire "project". These values are used for finding files (search paths) and for defining shared resources for all tests. This configuration is known as a "project index" and it is encoded in an XML file. See Project Index Definition for more details.

Given an encoded test plan, project index (which is optional) and the referenced test files, the user can launch a test run using the command line Java program called com.goldencode.harness.Harness. This program will do the following:

- Read the test plan which loads each test set, the list of tests and all configuration data. If valid, these definitions are all created as in-memory objects that are prepared for execution.

- If there is any missing information in regards to login information (userids and passwords can be encoded in the test plan but if they don't exist, the command line harness program will prompt the user). This allows different users to launch testing without editing definition files and without encoding sensitive data (like passwords) into files.

- The loaded test plan is then executed. The harness waits while test execution is done on separate threads. All the results of that execution run are stored and when all the processing is complete, the harness continues processing.

- A report generator is used to write the test results into a hyperlinked set of HTML documents.

For details on the command line launcher, see Running the Harness.

Besides the harness command line launcher, there is much more runtime support needed for the execution of a test plan. The following is a high level description of the components:

- Reportable - An interface that enables delegation of report generation to another class by providing access to report data that is specific to the instance. Graphs of objects that implement Reportable can be walked and inspected to obtain test results. These results can then be written into hyperlinked HTML report documents. This interface provides the report generator the ability to inspect start time, end time, elapsed time, name, description, test status, failure reasons and other critical reporting data.

- Report - The abstract base class which provides the core implementation of Reportable for test plans, test sets, tests and test steps (directives). These various entities are linked together in object graphs which the resulting HTML documents mirror. For example, this allows the reader of the reports to see a report on the test plan results and then link from there to each of the test set result documents. This base class centralizes much of the work of storing and retrieving the report details.

- Testable - A sub-interface of Reportable which enables delegating the execution of a testable activity. It has a single void execute(Terminal term) method which is used to run the testing of that object.

- Test - The base class which implements Testable and extends Report. This is a container for an ordered list of 1 or more Testable test steps that must be executed. When the Test.execute() is called, each of the Testable steps are executed in order. The report details of each step execution (timing, status, failure reasons...) are stored in each step. Likewise, the aggregated report details of the Test itself is stored in the Test object. This all works because the Test and all of the steps are Testable (which are Reportable).

- TestFactory - This is the class that reads the XML test definition and instantiates a Test (and all the contained Testable directives).

- TestSet - This is the class that contains the list of tests to be executed, manages the creation of the job queue, creates the threads that process the tests and then waits for these threads to complete. There must be at least 1 test set in a test plan. This is the object loaded from each test set portion of the test plan.

- Driver - This is a subclass of the Java Thread class which implements logic of worker threads in a TestSet thread pool. Each such thread is also known as a "session". Instances of this class are created by the TestSet class (1 for each thread in the thread pool). The thread then establishes its own Connection to the target system and creates a Terminal instance that uses that network transport to send input to the target system and to read the output sent back from the target system. Then, so long as there are more Test instances in the job queue, the Driver will get the next Test from the queue and then it will call Test.execute() (passing the thread-specific Terminal instance to the Testable). After Test.execute() returns (the test processing is complete for that test), the Driver handles any failure mode processing and then tries to get the next test (if the thread isn't marked to abort). When no more tests are available and the Driver is done processing its current test, it will notify the TestSet that it is done and it will exit. Notifications between the Driver theads and the TestSet are handled using a CountDownLatch.

- Thread Pool - Each TestSet has a pool of Driver threads which process the Test instances. These threads dequeue tests from a shared queue until there are no additional tests to process for this TestSet. Each thread pool is dedicated to processing tests only for a single TestSet.

- JobQueue - This manages the queuing of tests for it's TestSet. There is always only one instance of this class per TestSet. All the threads in the thread pool for this TestSet will use the JobQueue.dequeue() method to obtain the next Test to execute. This means that this instance is shared between all Driver threads in the same thread pool (i.e. in the same TestSet). Hidden inside this class is the usage of SubQueue elements. SubQueue instances implement a range of dependency types that allow Test processing to be constrained in various ways.

- Terminal - This represents a set of input and output primitives used for communicating with the back-end target system. Keys can be sent and the current screen can be read. Each thread Driver has its own instance (which means that each Driver thread is like a separate logical user of the target system). Many test directives use the Terminal instance to handle I/O with the target system or to implement synchronizing facilities (e.g. to block until certain results are received back from the server in response to previous input that was sent). The Terminal class uses facilities in the JTA project to provide the low level vt220 and vt320 emulation. The Terminal implements a non-visual terminal emulation client and hides the complexity of programming the JTA backing code. The primitive operations provided to the caller are independent of the terminal emulation type.

- Connection - An interface for providing a generic communications session with a back-end target system. SSH2Session is the only current implementation of this interface. This API allows the connection to be started, stopped and to obtain the associated InputStream and OutputStream for that connection. The SSH2Session uses the JSch project for this SSH2 support and it hides the complexity of those interfaces.

- TestPlan - This is the class that contains and manages the execution of multiple TestSet instances. It causes each TestSet to begin execution and it waits for all the TestSet instances to complete, using a CountDownLatch. The TestPlan is the in-memory executable representation of the XML test plan.

- TestPlanFactory - This is the class that reads the XML test plan definition and instantiates a TestPlan (and all the contained TestSet instances). During the loading of each TestSet, the TestFactory is called to load all Test instances into each TestSet. Thus a single call to load a test plan results in a complete loading of the entire graph of testing objects.

- ProjectIndex - An optional module that facilitates the use of project-level resources such as named rectangles and search paths for finding files. This is only used during the loading process of converting the XML definitions into the in-memory executable form. This class allows the language syntax to be shortened. For example, files can be referenced by a partial path and found during loading via a search algorithm.

- ProjectIndexLoader - This class reads the project index XML file and loads its contents into the ProjectIndex instance.

- ReportGenerator - This class is used by the Harness to create all of the HTML reports after a test run completes.

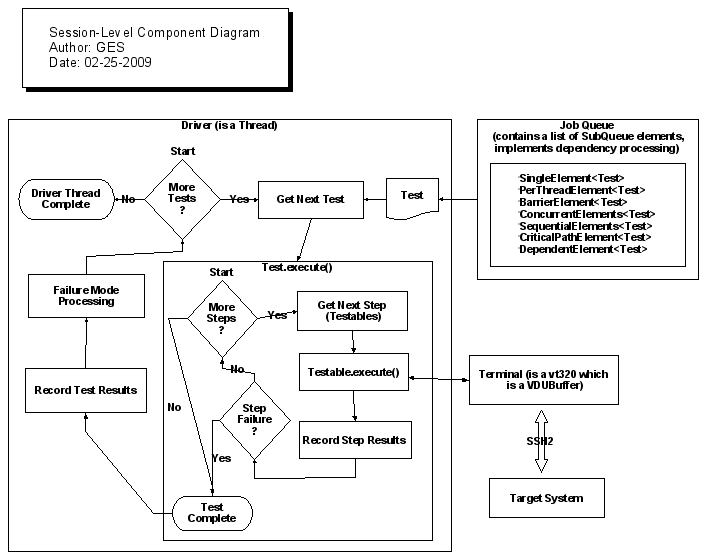

The following diagram depicts how a single session (thread) processes:

The above diagram shows the details of a single thread (potentially one of many in a test set's thread pool). It shows how the Driver processing loop works, how the Test.execute() method works and how the Terminal instance is used to communicate via SSH2 to the target system.

The Terminal object provides the low level primitives for programming/automating the interaction with the back-end target system. Here are the details of how the Terminal object works:

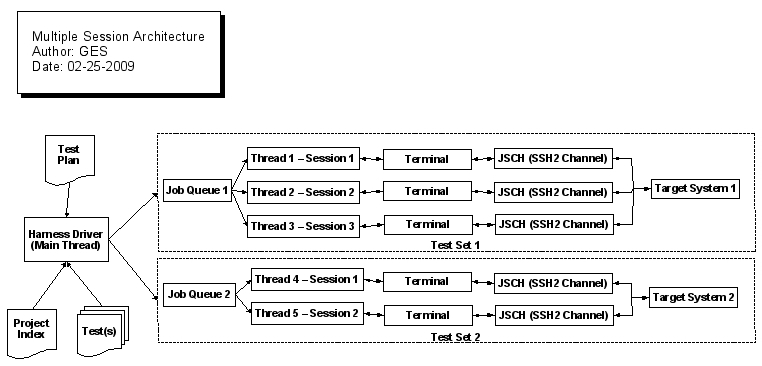

The following diagram demonstrates the overall Harness process, the XML input files that define the testing run and the structure of how test sets, job queues, sessions and target systems all interact.

Running the Harness¶

To run the harness, first change your current directory to the directory in which the test plan exists. This is considered the "root" directory for the associated testing project.

From there, run this command (this is valid for Linux or UNIX):

java -cp ../path/to/jarfiles/harness.jar:. com.goldencode.harness.Harness my_test_plan.xml

On Windows this might be:

java -cp ..\path\to\jarfiles\harness.jar;. com.goldencode.harness.Harness my_test_plan.xml

If there are any problems loading the tests, an error will be displayed and the harness will exit. If the test details can all be loaded properly, the harness will prompt you for userid and password if needed. Then it will execute the testing and exit.

You will then need to use your browser to look at the HTML reports for that run. This will be in the directory specified in the output-directory element of the test plan or if that was not specified, the reports will be found in a ./results/ sub-directory under the current directory.

Full syntax for the command line driver:

java com.goldencode.harness.Harness [options] <test_plan_filename>

Options:

| Option | Description |

|---|---|

| -? | display a help screen |

| -d | enable verbose output |

| -d -d | enable even more verbose output |

| -l <log_level> | set log level: WARN,INFO,FINE,FINER |

| -g <float> | allows for multiplying all timeouts in test scenario by a factor |

| -q <integer> | maximum parallel TestSet, set 1 for sequential |

| -c | enable dumping reports on CTRL-C |

| -x | abort anytime when RC fails |

| -m | enable performance test mode |

| -s <skipListFile> | allow providing a list of tests to be skipped |

| -f <test_list> | comma separated names of tests to be included in the whitelist |

| -b <path_list> | specify a default set of baseline search paths; <path_list> is a list of one or more paths separated by the platform specific path separator character (e.g. on UNIX orLinux this would be the colon ':') |

| -e | force separate host/port/userid/password prompts for each target system (the default is to only prompt once and to use the same responses for each of the target definitions which don't have the values defined |

| -t <path_list> | specify a default set of test/include search paths; <path_list> has the same format as the -b option |

| -v <var=value> | specify values for variables which otherwise would need to be read from the console |

| -h <host> | specify the host to connect |

| -p <port> | specify the port to connect |

| -u <userid> | specify the userid used to connect |

| -w <password> | specify the password used to connect. WARNING! Setting the password in the command line will expose it in the process list. Use this option with care, as it can pose security problems. |

| -n <endpoint> | the override endpoint for web tests |

| -oauth1 <param1=value1 param2=value2 ... paramN=valueN> | list of override parameters for oauth1 authorization (web tests only) |

| -o <var1=value1 var2=value2 ... varN=valueN | list of override variable initialization values |

Parameters:

| Parameter | Description |

|---|---|

| test_plan_filename | specific test plan to run (this is the relative or absolute filename of the XML test plan definition) |

See integer value obtained from ExitCode.getCode() will be used in the call to System.exit().

Encoding Test Definitions¶

Each test is a set of directives contained in a valid XML file. Here is the overall structure:

<?xml version="1.0"?> <test name="example" description="This is an example test description." > <!-- test directives go here --> </test>

The following attributes can be used in the <test> element:

| Name | Description | Required |

|---|---|---|

| backout | The name of a an XML test definition file that contains the steps to execute to backout (or cleanup) any processing for a failing test. The point of these steps is to leave the terminal application in a state where subsequent tests can process properly. This is only used with the failure mode BACKOUT. |

N (only required for failure mode BACKOUT) |

| dependency | One of the following (values are case-insensitive) dependency types: • NONE • PRE_CONDITION • CRITICAL_PATH • CONCURRENT • SEQUENTIAL • SPECIFIC • POST_CONDITION Each dependency type imposes specific constraints on how and/or when the test can execute. A NONE dependency means that the test runs in the order determined by its order in the test set AND by any constraints forced upon all tests by other tests with dependencies. In fact, all other dependency types can cause tests to execute in a different order than listed in the test set. Absent any influence of other dependencies, the NONE dependency does not cause any further modifications to test execution. A PRE_CONDITION is a test that must successfully run on every thread (session) in the test set. A duplicate of the test will be made for each thread in the test-set's thread pool. All pre-condition tests will be run first (in the relative order as compared with other post condition tests in the test-set definition). A test that is not a pre-condition can never run before a test that is a pre-condition. Since such tests run once on each of the threads in the thread pool AND before any other processing, pre-conditions are ideal for code that executes a login to an application, such that all subsequent tests don't have to login. A CRITICAL_PATH dependency generally causes all tests following it in definition order to wait for execution until the CRITICAL_PATH test is complete. The exceptions to this rule: 1. PRE_CONDITIONS execute first regardless of order. 2. CONCURRENT and SEQUENTIAL dependencies are "group" oriented. In other words, they have more than 1 test that is jointly controlled as a member of a named group. If one of the tests in a group has been defined before the CRITICAL_PATH test, then all of the other tests in that group will similarly be considered defined before the CRITICAL_PATH test. A CONCURRENT dependency forces all of the tests in that same group to start execution at the same time. This is a group oriented dependency and it requires a group attribute specifying an arbitrary group name to which to belong. No matter where the subsequent CONCURRENT tests are defined in the list, they will all be executed at the same time as the first one is executed, although multiple Driver threads will likely be blocked inside the JobQueue.dequeue() method while they wait for enough threads to be ready such that all the concurrent tests can start at the same time. There is no limit on when the tests end, this is a start constraint only. Please note that a deadlock can occur if there are more concurrent tests in the same group than threads (in that test set) to execute them. Even if the test set defines enough threads, tests that fail with a failure mode of ABORT_THREAD, will reduce the thread pool size dynamically which may cause there to be too few threads to allow the concurrent tests to execute. When there are not enough threads, a deadlock will occur and the test session will have to be aborted. A SEQUENTIAL dependency forces all of the tests in that same group to execute sequentially on the same exact thread. This is a group oriented dependency and it requires a group attribute specifying an arbitrary group name to which to belong. No matter where the subsequent SEQUENTIAL tests are defined in the list, they will all be executed starting at the same point as the first one in the list (it can make it look like these tests "jumped ahead" in line), however since the thread can only execute one at a time, each test in the same SEQUENTIAL group will be executed in order of definition on the same thread, until all of them are complete and then other tests can be dequeued and executed on that thread. If one of the sequential tests in the same group fails, then none of the following tests will execute and all of those following tests will be marked with a status of FAILED_DEPENDENCY. A SPECIFIC dependency forces the test to wait until a named other test completes successfully. The test upon which another test depends is specified usign the referent attribute. Execution of the dependent test is deferred until the referent test successfully completes. Since many tests can all refer to the same referent, and other tests can refer to the dependent tests (and so on), a tree of dependencies can be created using this dependency type. With each node in the tree that sucessfully completes. all dependent nodes then are freed to execute (from there on independently, possibly causing there to be independent sub-trees of dependencies). Any failure by a referent will cause the entire tree of dependencies (from that referent onward) to be marked as FAILED_DEPENDENCY and those so marked will not be executed. Please note that a SPECIFIC dependency does not force dependent tests to run on the same thread. Once the referent test is complete, the dependent test(s) will run on the first available thread from the thread pool. A POST_CONDITION dependency is a test that must successfully run on every thread (session) in the test set. A duplicate of the test will be made for each thread in the test-set's thread pool. All post-condition tests will be run last (in the relative order as compared with other post condition tests in the test-set definition). A test that is not a post-condition can never run after a test that is a post-condition. Post conditions will not run until there are no other tests to dequeue other than other post conditions. Since such tests run once on each of the threads in the thread pool AND at the end of all other processing, post-conditions are ideal for code that executes a logoff from an application. |

N (defaults to NONE) |

| description | The verbose description of the test. This can be any text that is useful for describing the test. | N |

| failure-mode | This tells the harness how to handle a test failure (which is detected as soon as any step fails). It must be one of the following: • IGNORE • ABORT_THREAD • BACKOUT An IGNORE failure mode marks the test as failed and has no effect on any subsequent test processing. All subsequent tests continue to process. An ABORT_THREAD failure mode marks the test as failed and then causes the current Driver thread to exit. As long as there are other threads remaining in the thread group, test execution will continue. However, there can be two problems: • If the last thread aborts, then the test set will end processing at that moment and any tests remaining in the queue will not be run. They will be marked NOT_RUN. • If there is a CONCURRENT dependency and the number of tests in the concurrent group is greater than the number of remaining threads, then test processing will deadlock waiting for enough threads to be available. A BACKOUT failure mode marks the test as failed and then runs the steps read from the XML test definition file specified by the backout attribute. |

N (defaults to IGNORE) |

| group | The name of the dependency group to which this test belongs. This group name can be any unique string that is shared between multiple tests with the same dependency type. This is only used for dependency types CONCURRENT and SEQUENTIAL. |

N (this is only required when the dependency is CONCURRENT or SEQUENTIAL) |

| name | The short name for the test. There are no spaces or special characters used in this name. It will be used for filenames and HTML links. | Y |

| referent | The filename of a test on which to be dependent. Only used for the SPECIFIC dependency type. The filename must be specified exactly the same (case and text) as was the filename attribute of the test that is being specified. In addition, that referent test must already have been defined. Lastly, since it is possible for the same filename to be defined multiple times, the referent can only refer to the most recent test definition that used that filename. | N (this is only required when the dependency is SPECIFIC) |

| repeat | A positive integer specifying the number of duplicate instances of this test to include in the test set. For example. if "5" is specified, then the test will be executed 5 times (and each test will be treated as a separate and independent test, potentially running on a separate thread). This is a load-time construct. The loading process will duplicate the test in the in-memory test set definition and then each test instance (though loaded from the same definition) will be treated separately at runtime. |

N (defaults to 1) |

| timeout | A 32-bit integer specifying the maximum number of milliseconds to allow for test execution before the test fails. Note that the test will not be interrupted at that time. Instead, the total elapsed time for the test is compared to this value at the end of test execution and if this elapsed time is greater than this timeout value, then the test will be set into a FAILED status, even if all of the test steps PASSED. This is a way to enforce that a successful functional run of the test must complete in within this timeout value in milliseconds. | N |

A directive is a single step of the test. These steps instruct the harness to execute some logic such as sending keystrokes to the target system or checking that the current terminal screen matches a captured baseline screen.

To encode a directive, it is created as a child node of the <test> element. The list of directives form the steps for the test. When the test is being run, each directive will be executed in its order of appearance in the file.

There is no parallelism or threading provided for a single test, however it is possible to execute and coordinate multiple tests running in different sessions (threads), such that a multi-user scenario can be simulated.

Test directives fall into the following categories:

- Terminal Input

- Send Keystrokes

- send-text

- send-special-key

- Cursor Position

- check-cursor-pos

- read-cursor-pos

- Send Keystrokes

- Output Checking

- Screens

- check-screen-buffer

- clear-screen-buffer

- read-string

- wait-for-screen-buffer

- Files

- binary-file-comparison

- text-file-comparison

- Screens

- Timing

- pause

- Logging

- log

- log-cursor-pos

- log-screen-buffer

- Variable Definition and Processing

- check-variable

- variable

- Running User-Defined Logic

- run-code

- Include Processing

- include

- File System

- SFTP

- cd

- get

- lcd

- lpwd

- ls

- mkdir

- put

- pwd

- rename

- rm

- rmdir

- symlink

- SFTP

- Thread Coordination

- Mutex Semaphores

- acquire-mutex

- release-mutex

- Event Semaphores

- check-post-count

- post-event-sem

- reset-event-sem

- wait-event-sem

- Mutex Semaphores

Please see the Test Directive Reference for details of the syntax for encoding specific directives.

Avoiding Test Failures¶

Without careful planning, it is easy for tests to be defined such that they are susceptible to 2 kinds of failures:

- false positives (tests which pass when they should really fail)

- false negatives (tests which fail when they should really pass)

The problems can be avoided with proper encoding of the test. It is important to:

- maximize data stability

- avoid certain bad practices

- frequently synchronize execution of the test with the current state of the server

Data stability is the concept that each time a test runs, the data is the same (or as close as possible) to the state of the state at the time the tests were originally encoded. This maximizes the amount of input data that can be hard coded into test definitions and greatly simplifies the coding of tests. An example of how to ensure data stability is to restore the application's database to a specific/known backup before running testing.

Bad practices to avoid:

- When test execution is at the login shell of the target system, DO NOT execute a command that would cause the shell to exit (this will disconnect the session with the back-end system and no further steps or other tests can run in that session/thread). For example, on Linux and most UNIX systems if you type "exit" and press the ENTER key, the login shell (and your communications session) will end.

- Screen buffer checks are a useful thing to separate into an include file so that the screen check can be implemented in many tests with very little code. However, it is important that the screen captures are EITHER not specific to the path by which the application took to get to the screen OR those portions of the screen that vary based on the previous navigation are excluded. Special attention to this prior state needs to be taken to avoid unexpected failures. The good news is that these are usually found at development time rather (when the new usage of the screen check is first tested) than at the time the official testing is run.

- It is easy to encode a test which is dependent upon the state of the file system, security permissions/rights, the specific user's configured environment or other application state that is variable over time, user or other criteria. If an application changes its behavior based on state that is variable, this state must be controlled carefully before testing is run to avoid failures. For example, a reporting application (which writes a report in a text file in response to some user input) may work one way the first time the report is generated and may add additional prompts in subsequent runs. In this example, if the report file already exists (due to a prior run) the application may prompt the user to confirm that it should overwrite the report file. This variablity of the applications behavior must be made deterministic, through careful control over the environment and state of the system and application.

- Many applications will have different behavior (and appearance) on the first time a particular screen or function is accessed than in subsequent times. This means that some screen baselines cannot/should not be re-used. Or if reuse is important, then subsequent usage may need additional exclusion regions to allow the screens to match. Such failures are usually found and fixed during development.

Test step synchronization is the process by which the execution of subsequent test directives (test steps) is not allowed to continue until it is confirmed that the server has fully and successfully responded to all prior input. For example, many applications provide some mechanism of type-ahead. However, some applications don't provide type-ahead and even some applications that do support type-ahead, have limits on how type-ahead can be used. This inherently limits the speed with which key input can be sent to the server because the harness can easily overrun the server (sending keys that will be discarded). In addition, since the server's response time is variable (and under load or other conditions it may vary widely), most applications have significant limitations on how much can be typed ahead before the user must wait for the server to catch up.

In interactive usage, this is part of the end-user's job. They naturally have to wait at specific points for the server to catch up before proceeding. This is absolutely essential to do with great frequency in automating testing. If this is not done, the test execution will appear unreliable or will fail based on timing of responses from the server. The harness includes a variety of synchronization mechanisms that can be used to fully avoid failures by frequent synchronization.

Synchronization tools:

- check-screen-buffer - This is the best, most useful synchronization tool. When the wait option is used, it allows the harness to pause test execution until a specific screen or partial screen is matched coming back from the server. There is a timeout such that the test fails if a response has not be received at that time.

- Keystroke response draining - the send-text and send-special-key directives support a draining option. This tells the harness to block further execution until some amount of output has been read back from the server AND for some period of time there has been no more output sent from the server. This quiecence is detected and excution resumes. This also is controllable via a timeout to allow failures to be detected. Note that this is dependent on knowing the amount of output to expect back, which is not the same as the number of characters that change on the screen. This is measured in raw bytes sent by the server, which includes escape sequences and terminal processing (attributes, cursor movements...) in addition the the modified text content. This is useful for certain cases and can be used reliably, but it is not a powerful as the check-screen-buffer.

- wait-for-screen-buffer - This can be used to detect that any output has been recieved (when combined with a previous clear-screen-buffer) OR it can be used to wait for a specific string of text (not a multiple-row rectangle, but a section of text on one row) appears anywhere on the screen. This is useful in cases where there is some known text but its location on the screen cannot be predetermined (possibly due to scrolling of the screen or unknown prior state).

- Inter-key delay - the send-text directive can have an inter-key pause specified to slow down the pace of sending keys. This can have some use but it is quite weak and should be avoided if possible in preference to the mechanisms above. In particular, it doesn't handle variability at all without significantly degrading the time it takes to execute a test (by specifying a very large interkey delay).

- pause - This is a fixed (hard coded) pause for a specific amount of time. It is very crude and has the same limitations as the interkey delay. Avoid it as much as possible.

Web Test Directive Reference¶

The supported web services are REST and SOAP. These can be tested via the rest and soap test directive, which have the exact same syntax and definition - the only differences exist at runtime, how the request and response payloads are interpreted and some other differences.

The rest or soap test directive have this structure:

<soap|rest service="optional, vars-aware" method="post|get|put|...[optional,default GET/REST or POST/SOAP]">

<authorization type="oauth1"> <!-- optional -->

<parameter name="oauth_consumer_key" value="optional"/>

<parameter name="oauth_consumer_secret" value="optional"/>

<parameter name="oauth_token" value="optional"/>

<parameter name="oauth_token_secret" value="optional"/>

<parameter name="realm" value="optional"/>

<parameter name="oauth_callback" value="optional"/>

<parameter name="oauth_verifier" value="optional"/>

</authorization>

<query name="vars-aware" value="vars-aware" /> <!-- optional -->

<header name="vars-aware" value="vars-aware" /> <!-- optional -->

<body filename="path/to/request/body.xml|json"> <!-- optional -->

<![CDATA[

JSON or SOAP body, can't be both filename and cdata

]]>

<replace path="" variable="" value="" /> <!-- optional -->

</body>

<response filename="path/to/response/body.xml|json"> <!-- optional -->

<![CDATA[

JSON or SOAP expected body, can't be both filename and cdata

]]>

<status code="optional" reason="optional" /> <!-- optional -->

<header name="" value="optional" save="optional" /> <!-- optional -->

<body save="optional"> <!-- optional -->

<exclude path="" /> <!-- optional -->

<include path="" /> <!-- optional -->

</body>

<exists path="" save="optional" /> <!-- optional -->

<regex path="" value="" save="optional" /> <!-- optional -->

<exact path="" value="" save="optional" /> <!-- optional -->

</response>

</soap|rest>

Each configuration or attribute will be described below, but in the template above the following can be interpreted as:

soap|restmeans this is either thesoaporrestdirective.optionalmeans either the attribute or the entire node is optional.vars-awaremeans an attribute will have all variable references replaced with their current, runtime value, before being used to build the request. To use this variable substitution, reference the variable in the attribute likeendpoint="${REST_ENDPOINT}/path/to/service". This means the variable must be specified in uppercase always (regardless how it was defined), enclosed in curly braces and starting with the $ sign.

rest or soap. For these, the service and method attributes can be specified, where:

service, if missing, defaults to theendpointattribute from the web target configuration. Otherwise, if after replacing all variable references the service doesn't start withhttp://orhttps://, the default endpoint will be pre-pended.method, which if missing defaults toGETforrestandPOSTforsoap.

authorization, optional. Onlyoauth1type can be specified at this time. Theparameterchild node will specify anoauth1setting. Ifvalueis missing or empty, the parameter will be ignored.query, zero or more entries with the query parameters set at the http request. Both thenameandvaluewill have all variable references processed.header, zero or more entries with the request headers set at the http request. TheContent-typeheader will always be set and will default totext/xmlfor SOAP and toapplication/jsonfor REST. Both thenameandvaluewill have all variable references processed.body, optional, with the request body. This can be specified either viafilenameor the CDATA, but not both. Thereplacetag (zero or more entries) can be used to replace either aXPathorJsonPath, if thepathattribute is set or a variable reference, if thevariableis set. You can't specify bothvariableandpath. The target will be replaced with the specifiedvalue.response, optional, with the expected response. This can be specified either viafilenameor the CDATA, but not both. If noresponsetag exists, then the test is considered passed. Otherwise, the test can check for:status, a single entry with the response status code and reason match. Both are optional.header, zero or more entries to check for header values. If the header matches the expected value or thevalueis not specified at the test, the header's value will be saved in the variable.exists, zero or more entries to check if a certain path in the received body exists. If it exists, its value will be saved in the specifiedvariable.regex, zero or more entries to check if a certain path matches the specifiedregexvalue. If the path exists as a leaf and resolves to a single value, which matches the expected one, its value will be saved in the specifiedvariable.exact, zero or more entries for an exact match for a certain path. If the path exists as a leaf and resolves to a single value, which matches the expected one, its value will be saved in the specifiedvariable.body, zero or one entry which will check the entire response body against the template one. If it matches, the body will be saved in the specified variable. Before the match, the body will be processed to include all paths resolved frominclude(0 or more entries) and then exclude all paths resolved fromexclude(0 or more entries) all specified paths. Whatever paths remain in the template and body after this processing will be matched exactly.

When 'paths' are mentioned, these must follow the XPath or JsonPath syntax ( https://github.com/json-path/JsonPath ) .

Test Directive Reference¶

The following defines an alphabetical reference guide to all test directives. Each entry provides complete documentation on how to encode the corresponding directive in XML form.

acquire-mutex

binary-file-comparison

check-cursor-pos

check-post-count

check-screen-buffer

check-variable

clear-screen-buffer

include

log

log-cursor-pos

log-screen-buffer

pause

post-event-sem

read-cursor-pos

read-string

release-mutex

reset-event-sem

rest

run-code

send-special-key

send-text

SFTP

cd

get

lcd

lpwd

ls

mkdir

pwd

put

rename

rm

rmdir

symlink

soap

text-file-comparison

variable

wait-event-sem

wait-for-screen-buffer

acquire-mutex¶

Element Tag

acquire-mutex

Description

Acquires ownership of the mutex semaphore, blocking until that ownership is obtained. The only way this step fails is if it is interrupted while waiting for ownership.

Mutex (or mutual exclusion) semaphores are a tool for synchronizing access to a shared resource between two or more threads. To protect any given resource, make a call to acquire-mutex on the specific accessing thread before that thread reads, writes or otherwise accesses that resource. Any other thread which executes acquire-mutex will block while another thread has ownership. When access to the resource is finished, the thread must call release-mutex to relinquish its ownership. If there are threads blocked waiting for ownership at the time it is released, one of those threads will be unblocked and will obtain ownership before continuing.

The contract of the acquire-mutex is that it will not return until the current thread has ownership of the semaphore. That means it may block an indefinite amount of time. If no thread has ownership of the semaphore on invocation of acquire-mutex, then the calling thread will be given ownership and the call will immediately return without blocking.

Recursion is allowed and if acquire-mutex is called multiple times before release-mutex, then the use counter of the semaphore will be incremented but otherwise acquire-mutex will immediately return (because by definition, if acquire-mutex has been previously called without a corresponding release-mutex, then the current thread already owns the semaphore). To release ownership, the same number of release-mutex calls must occur as there were acquire-mutex. In other words, it is very important to pair up all calls to acquire-mutex with a corresponding call to release-mutex. Each release-mutex call decrements the use count. When the use count reaches zero, the ownership of the semaphore will be released (and another waiting thread, if any, will be unblocked and acquire ownership).

Mutex semaphores are created using a <create-mutex> element in a <resource> element inside a test plan or a specific test set. See Test Plan Definition for more details.

Contained Element(s)

none

Containing Element(s)

test

Attributes

| Name | Description | Required |

|---|---|---|

| name | A text string with the case-insensitive name of the semaphore. | Y |

| repeat | A positive integer specifying the number of sequential duplicate instances of this step to include in the test. For example. if "5" is specified, then the step will be executed 5 times sequentially (and each step will be treated as a separate and independent step). If the 3rd repetition fails, then the reports will show that the first two instances succeeded and the 3rd failed and the last two were not run. This is a load-time construct that is not specific to this directive. The loading process will duplicate the step in the in-memory test definition and then each step (though loaded from the same definition) will be treated separately at runtime. |

N (defaults to 1) |

Example(s)

<acquire-mutex name="myMutexSemaphore" />

binary-file-comparison¶

Element Tag

binary-file-comparison

Description

Compare the contents of two binary files to determine if each matching index position in the stream they are the same bytes. Excluded regions (ranges of bytes specified by start and end index positions that are relative to the beginning of the file) can be specified. Any data in excluded regions is not compared (it is assumed to be the same).

The comparisons are done on a byte for byte basis. The data is not read as characters nor is the data interpreted in any manner. Control characters and other non-visible characters are treated exactly the same as any other character, since there is no distinction.

The baseline file is the captured file that defines the expected data. The "actual" file is usually something generated as a result of input processing on the server.

This step will fail at the first byte that is different between the baseline and actual files, outside of exclusion regions.

The actual file can be specified with a local file name (using the actual attribute) or it can be specified as a remote file on the target system (using the remote attribute). In remote mode, the file specified is either an absolute path on the server side or it is a relative path based on the current directory on the server side. The specified server side file will be copied from that system to the local system (in the output directory) using SFTP.

If the actual file is specified as a local file, then the file will have to have been transferred there via some previous processing during the test cycle. The SFTP test directives can be used for this purpose.

If the baseline file or the actual/remote file cannot be found or the filenames don't reference files (e.g. a name references a directory), this step will fail. Likewise, any I/O failure during step processing will fail the step.

Exclusion regions are specified by 0 or more sub-elements of the following form:

<exclude start="100" end="102" />

The start and end indexes are relative to the beginning of the file and are 0-based. If there are 0 exclude sub-elements, then there are no excluded regions and every byte of the files must match.

If one of the comparison files is larger than the other file, then the step will fail without even comparing the data since by definition the contents must be different somewhere.

Contained Element(s)

exclude

Containing Element(s)

test

Attributes

| Name | Description | Required |

|---|---|---|

| actual | The local filename of the file to compare against the baseline. If specified, the file is assumed to exist and to have been explicitly copied from the target system to the specified file before this step executes. It is the responsibility of the test developer to ensure that the file exists. | N except that at least one (and only one) of the actual or remote attributes MUST be present |

| baseline | This specifies the absolute or relative filename of the file that contains the expected bytes. This file is usually pre-captured and is stored along with test definitions. The project index "baseline" search path is a list of paths to search for baseline files. The ProjectIndex.findBaseLine() method is used for that search. See Project Index Definition for more details. | Y |

| repeat | A positive integer specifying the number of sequential duplicate instances of this step to include in the test. For example. if "5" is specified, then the step will be executed 5 times sequentially (and each step will be treated as a separate and independent step). If the 3rd repetition fails, then the reports will show that the first two instances succeeded and the 3rd failed and the last two were not run. This is a load-time construct that is not specific to this directive. The loading process will duplicate the step in the in-memory test definition and then each step (though loaded from the same definition) will be treated separately at runtime. |

N (defaults to 1) |

| remote | The file name on the target system (the server) which contains the data to be compared against the contents of the baseline. This file will be downloaded at step execution via SFTP from the target system to the local system. It will be stored in the download directory of the results (output directory) which will be interrogated from the project index via ProjectIndex.getDownloadDirectory(). See Download Directory for more details. | N except that at least one (and only one) of the actual or remote attributes MUST be present |

Example(s)

Compares two local binary files (some previous step must have created/copied the actual file to the proper location.

< binary-file-comparison baseline="test5/details_rpt.out" actual="results/download/test5_details_rpt.out" />

Compares a local binary file (the baseline) with a file on the target system (which is downloaded via SFTP at step execution).

< binary-file-comparison baseline="test5/details_rpt.out" remote="test5_details_rpt.out" />

Same as the previous example, except that certain byte ranges are excluded from the comparison.

< binary-file-comparison baseline="test5/details_rpt.out" remote="test5_details_rpt.out"> <exclude start="399" end="399" /> <exclude start="409" end="457" /> </binary-file-comparison>

check-cursor-pos¶

Element Tag

check-cursor-pos

Description

Compare the current cursor position (row and/or column) against a given expected row and/or column. Fails if the compared values don't match.

All row and column indexes are 0-based. The coordinate system is based on the upper left corner of the screen. So (0,0) is the first column of the first row of the terminal which is also the upper left character on the display.

Contained Element(s)

none

Containing Element(s)

test

Attributes

| Name | Description | Required |

|---|---|---|

| column | A 0-based integer index specifying the column number to compare against. Use -1 to disable column comparisons. | N except that at least one of the row/column attributes MUST be present (defaults to -1) |

| repeat | A positive integer specifying the number of sequential duplicate instances of this step to include in the test. For example. if "5" is specified, then the step will be executed 5 times sequentially (and each step will be treated as a separate and independent step). If the 3rd repetition fails, then the reports will show that the first two instances succeeded and the 3rd failed and the last two were not run. This is a load-time construct that is not specific to this directive. The loading process will duplicate the step in the in-memory test definition and then each step (though loaded from the same definition) will be treated separately at runtime. |

N (defaults to 1) |

| row | A 0-based integer index specifying the column number to compare against. Use -1 to disable column comparisons. | N except that at least one of the row/column attributes MUST be present (defaults to -1) |

Example(s)

Fail if the actual cursor row position is not on the 24th row (bottommost of the terminal if the terminal height is 24 lines).

<check-cursor-pos row="23" />

Fail if the actual cursor column position is not in the sixth column.

<check-cursor-pos column="5" />

Fail if the actual cursor row and column position is not in the upper left corner.

<check-cursor-pos row="0" column="0" />

check-post-count¶

Element Tag

check-post-count

Description

Compare the post count of the named event semaphore and fail if the actual post count is not greater than the expected post count.

Event semaphores provide safe signalling and coordination of a specific event across multiple threads. It is a concurrency primitive.

Event semaphores are created using a <create-event-sem> element in a <resource> element inside a test plan or a specific test set. See Test Plan Definition for more details. When created, an event semaphore can be in a "set" state (threads that call the wait-event-sem will block) or it can be in a "posted" state (the event has occurred and any threads that call wait-event-sem will immediately return).

The event can be triggered by calling post-event-sem which will release any currently waiting threads (threads blocked in wait-event-sem). Posting can occur 0 or more times before the state is reset via reset-event-sem. Reset causes the semaphore's state to be "set" again, no matter whether it was previously in a set or posted state. A post count is kept which is the number of times the event has been posted since the last reset. Each reset clears the post count to zero.

Contained Element(s)

none

Containing Element(s)

test

Attributes

| Name | Description | Required |

|---|---|---|

| name | A text string with the case-insensitive name of the semaphore. | Y |

| repeat | A positive integer specifying the number of sequential duplicate instances of this step to include in the test. For example. if "5" is specified, then the step will be executed 5 times sequentially (and each step will be treated as a separate and independent step). If the 3rd repetition fails, then the reports will show that the first two instances succeeded and the 3rd failed and the last two were not run. This is a load-time construct that is not specific to this directive. The loading process will duplicate the step in the in-memory test definition and then each step (though loaded from the same definition) will be treated separately at runtime. |

N (defaults to 1) |

| expected | An integer specifying the post count which must be exceeded for the step to pass. A negative expected value will always pass since the post count is either 0 ("set" state) or it is some positive number ("posted" state). | N (defaults to -1) |

Example(s)

Fail if the semaphore is still "set" (has never been posted).

< check-post-count name="myEventSem" expected="0" />

Fail if the semaphore has not been posted at least 3 times.

< check-post-count name="myOtherEventSem" expected="2" />

check-screen-buffer¶

Element Tag

check-screen-buffer

Description

Compare the current terminal's screen with previously captured screen content (the "expected" contents or "baseline").

This can operate in 2 modes wait mode and non-wait mode.

In wait mode, the comparion processing will wait until the described screen becomes available OR the maximum timeout has occurred. This means that even if a screen is already there to check, if it doesn't match this directive will wait up to a maximum milliseconds value for the matching screen to become available. In this mode, there can never be a failure before the maximum timeout occurs, since it will just continue to wait and recheck.

In non-wait mode, a single check is made against the first screen found and if that check fails, the directive fails. The milliseconds value is simply the maximum milliseconds to wait for there to be ANY screen to check. In this mode, it is important to have previously used the clear-screen-buffer directive to allow a new screen to be detected. If the terminal's screen buffer has not been cleared, then so long as any output has ever been received by the harness, there will be a screen to check and the check will not wait at all.

If a timeout or interruption occurs before a screen becomes available or (in wait mode) before the expected screen becomes available, this directive will fail.

There may also be a rectangle definition (in attributes) in the check-screen-buffer element. This rectangle defines the "absolute" rectangle of the terminal screen which will be compared with the baseline data AND it defines the row/column offset at which the upper left portion of the baseline screen text is mapped for comparison. These are just 2 sides of the same idea. If an entire screen is being compared, then this rectangle must also be set to the terminal size (and positioned at (0,0) coordinates. If a partial screen is being compared, then the size of the rectangle will be smaller than the terminal screen and the coordinate position MAY be anywhere that is less than (TERMINAL_WIDTH - RECTANGLE_WIDTH, TERMINAL_HEIGHT - RECTANGLE_HEIGHT) - including (0,0) of course.

The coordinate system uses indexes that are 0-based and the (0,0) coordinate is the character in the upper left corner of the screen. In other words, the topmost and leftmost character is (0,0).

In comparing the baseline data to the actual screen data, the characters themselves are compared for equivalence. The encoding of the XML files and/or any screen capture text files is of critical importance. If the character data is encoded in one character set and then read back in a Java enironment with a different encoding, then the data will very likely be mismatching and the results will fail badly. In addition, it is expected that many screens will have varying data in certain locations which will naturally differ from the baseline data. For example, date or timestamps cannot be compared between captured and actual screens. To resolve this, a set of exclusion regions can be defined. Any character that is inside any of the exclusion regions (which is just a list of rectangles) is ignored (not compared) for the purposes of screen comparisons. The result of the step will depend only on data that is inside the overall comparison rectangle but not in any exclusion region.

Specifying Screen Text:

The screen text can be thought of as a 2 dimensional array of characters with the first dimension as the "y" and the second dimension as the "x". The actual screen data is read from the terminal's screen buffer. But to compare against the actual, the step must have the proper baseline screen data.

There are 3 ways to define the expected screen text.

1. In a separate file which is referenced using the filename attribute. This is simple to code into the XML, but it requires a separate file to be created. The easiest way is to cut and paste the rectangle of text from a terminal emulator into a text file. Each line in the file will be read into the screen definition as a separate row of the screen data. The order will be top to bottom just as it would normally appear on the screen. The newlines are dropped and all other data is copied into the screen baseline. Do NOT add extra lines to the end of the file. Do NOT leave extra whitespace at the end of the line. Minimize the contents to the smallest rectangle that will show all portions of the screen that need to be matched. Use exclusion regions to ignore other portions, but it is a good practice to "blank out" those portions (overwrite characters that will be excluded with the same exact number of spaces). This makes it easier to see the data that is going to be compared, though it has no impact on the overall comparison. This is the best approach for comparing entire screens or large portions of screens.

As a good practice, it may make sense to "blank" out unnecessary contents of a screen capture in the text file. To "blank" something, just replace the characters with an equivalent number of space characters. This is useful for any data which is being excluded AND which has no documentation value in the screen capture. This is opposed to a case where the text being ignored is a date, time, transaction number or some other data that is normally part of the screen but which is naturally varying, then leaving it (not blanked) will help others in the future who look at the screen. They will quickly see the exclusion region and understand what the data is that is being ignored.

The place where it is useful to blank it is when the data is "garbage" from some prior screen that was captured but has no real value in understanding the exclusion region. The idea is only to maximize the readability of the screen files. In practice, whatever you specify as an exclusion region is fully ignored (it isn't compared at all). The code that honors exclusions is in ScreenRegion.java.

2. In a set of one or more sub-elements with the element tag line. Each line element must have a single value attribute which contains the left justified text of that row of screen data. The entire list of lines is read into memory with the first line element being the top row of the baseline screen and the last line element being the bottom row of the baseline screen. This is useful for a small screen rectangle, but it has the drawback of requiring certain characters to be encoded as XML entities (e.g. the < or & characters).

3. As a simple string using the value attribute (and optionally positioning that string somewhere on the screen using the row and/or column attributes. The row and column values default to 0 and 0. But if either is specified, this sets the row and the leftmost column for the comparison. Since a string is inherently a single row concept (don't try to use newlines in the string), the ending row is always the same as the starting row. The width of the string is known so the rightmost column is automatically calculated. This is the only method of specifying screen text that does not require a complete rectangle definition as part of the check-screen-buffer element since the ending row and ending columns can always be calculated. This is useful for clean and quick definitions of a partial row of text, but it has the drawback of requiring certain characters to be encoded as XML entities (e.g. the < or & characters).

Please note that in all 3 methods, the baseline data being compared is only the text itself. Colors and terminal attributes (underlines, blinking, reverse highlights...) are NOT captured and NOT compared at this time. If you cut and paste from a terminal, normally you will only see the text and the other attributes are missing. You may find portions of the screen that are only spaces that are drawn with a particular color/terminal attribute. For example, a some entry fields in character applications draw with the underline attribute using spaces for the output character.

Defining Rectangles:

Rectangles are used to define the portion of the screen being compared as well as any exclusion regions to be ignored.

If not otherwise specified, the rectangle will be created with the terminal's screen size. Any specified attributes will override the associated defaults for that same coordinate of the rectangle, but any unspecified attributes will still be defaulted.

There are 3 ways to specify a rectangle via attributes:

1. Reference to a well known "named" rectangle. This is accomplished by a named-rectangle attribute. The named rectangle will be looked up using ProjectIndex.getRectangle(). See Project Index Definition for more details.

2. Explicit Coordinates (by specifying the upper-left and lower-right points). Attribute names: left, right, top, bottom. Example:

<check-screen-buffer filename="my_screen.txt" wait="true" millis="5000" left="3" right="70" top="0" bottom="5" />

3. Relative Coordinates (by specifying the starting row/column and a height and width). Attribute names: row, column, height, width. Example:

<check-screen-buffer filename="my_screen.txt" wait="true" millis="5000" row="0" column="3" height="6" width="68" />

The above order is the order in which a rectangle is loaded. If there is a named-rectangle attribute, the first attempt is to find a named rectangle. If that fails, explicit coordinates are checked. Between options 2 and 3, preference is given to the explicit coordinates approach. If any of the attributes for that approach exist, then that is the method used. This means that any relative coordinates attributes that exist would be ignored in that case.

The origin of any rectangle is zero-based and (0,0) is the upper-left corner of the screen.

Specifying Exclusion Regions:

A list of 0 or more exclusion regions can be specified. To specify an exclusion region, use the exclude sub-element like this:

<exclude named-rectangle="rect_name" /> <exclude left="0" right="5" top="17" bottom="23" /> <exclude row="17" height="7" column="0" width="6" />

Note that any form of rectangle definition can be used in an exclusion definition. The list of exclusions can mix and match rectangle definitions, without any issue.

Contained Element(s)

line

exclude

Containing Element(s)

test

Attributes

| Name | Description | Required |

|---|---|---|

| bottom | Part of an "explicit coordinates" rectangle definition. The 0-based integer number of the row that is closest to the bottom of the screen. The origin is in the upper left of the screen so bottom is a larger number (or equal if the rectangle only covers a single row) than top. | N (defaults to one less than the screen height) |

| column | Part of a "relative coordinates" rectangle definition. The 0-based integer number of the leftmost column. To find the rightmost column number, add (height - 1) to the column value. | N (defaults to 0) |