-

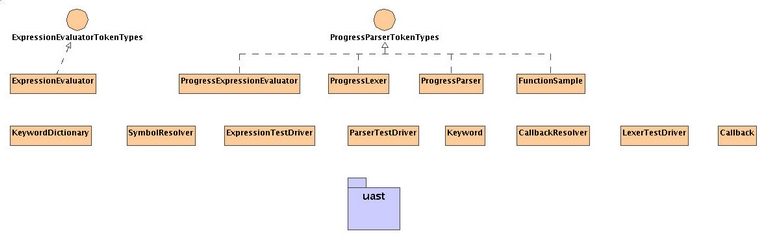

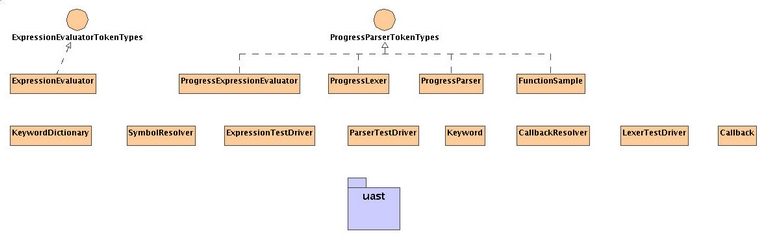

Interface Summary Interface Description ExpressionEvaluatorTokenTypes HintsConstants Constant values for the XML grammar used for hints.JavaTokenTypes Provides a complete set of token types sufficient to represent any Java program in an AST form.ProgressParserTokenTypes SymbolResolver.SchemaHelper<V> Functional interface for use with lambdas.TokenDataWrapper A simple wrapper interface to access basic token data in a wrappered object. -

Class Summary Class Description AstGenerator Returns an AST for a specified Progress source file by creating this AST (using the preprocessor and parser) or by loading an already existing persisted AST from a file.AstKey A container for an annotated AST which can be used as a hashable key in a hash map or hash set.Callback Encapsulates the data necessary to make a static or instance method call through the services provided byjava.lang.reflect.CallbackResolver Provides a mechanism for storage and retrieval of method callbacks as represented by theCallbackCallGraphGenerator Special-purpose driver for the pattern engine to create a new call graph for one or more root entry points.CallGraphHelper Common support routines for call graph processing.CallGraphWorker Provides a pattern engine service to create a new call graph based on matches found during AST processing.ClassDefinition Contains a dictionary for each type of class resource and provides methods to maintain and lookup in the respective namespaces.ClassDefinition.MemberData Stores a member's data.DotKludgeReader Implements a simple input reader based on an underlying input reader, which logically appends the dot character to the underlying input in case the latter terminates with anything else.DotKludgeStream Implements a simple input stream based on an underlying input stream, which logically appends the dot character to the underlying input stream in case the latter terminates with anything else.ExpressionEvaluator Recursively walks an AST generated from a valid Progress 4GL expression and evaluates each node to obtain the final result of the expression which is returned to the caller as aBaseDataType(wrapper).ExternalProcNodeFilter This class name can be passed to thePatternEnginevia thegraph-node-filterconfiguration parameter.FrameAstKey Objects of this class are used as keys for a hashtable that match frame definitions with compatible structure that can be used by shared frames partitions.Function A simple wrapper class to contain all related data about a function.FunctionSample Provides an example of how to implement user-defined functions and variables while using the standard Progress expression evaluator on a given Progress 4GL expression.GenerateClassMap Generates the Class Map file by searching for OO 4GL class files in a given set of paths.JavaAst Provides symbolic token name lookups that are specific to the Java AST, all other function is implemented in the super class.JavaPatternWorker A pattern worker that provides Java AST services and conversion of Java language token names (as defined by theJavaTokenTypes) to their integral types.JavaPatternWorker.WorkArea Context local work area.JavaSymbolResolver Provides symbol resolution services for Java ASTs.Keyword Encapsulates all data associated with a language keyword.KeywordDictionary Provides a mechanism for storage and retrieval of language keywords as represented by theKeywordLexerDumpFilter Provides a simple mechanism to run the ProgressLexer on a given Progress 4GL source file and to save the resulting stream of tokens while still servicing the needs of the ProgressParser.ProgressAst Provides symbolic token name lookups that are specific to the Progress AST, all other function is implemented in the super class.ProgressLexer Tokenizes a Progress 4GL source file (input as a stream of characters) into a stream of tokens suitable for theProgressParser.ProgressParser Creates an Abstract Syntax Tree (AST) representation of a Progress 4GL source file from an input stream of tokens (provided by theProgressLexerProgressPatternWorker A simple implementation of a pattern worker whose purpose is to convert Progress language token names (as defined by theProgressParser) to their integral types.ProgressStatsHelper Helper to copy and link to Progress 4GL source code.RootNodeList Reads a project-specific list of root entry point procedures.ScanDriver Provides a simple harness to scan a user-defined list of files and drive preprocessing and parsing of each file.SymbolResolver Contains a dictionary for each type of language namespace and provides methods to maintain and lookup in the respective namespaces.SymbolResolver.SearchResult Store the data resulting from a given file system search.SymbolResolver.UsingSpec Store the data in a USING statement.SymbolResolver.WorkArea Container with context-local data.UastHints Loads hints information from a UAST hints XML file into memory and exposes the data in an easily accessible format.UastHintsWorker Provides a pattern engine service to read string, long, double and boolean values using a file-unique key.Variable A simple wrapper class to contain all related data about a variable. -

Enum Summary Enum Description ClassDefinition.DataStoreType Data store identifier.SymbolResolver.UsingType Describes the type of the USING specification.

Package com.goldencode.p2j.uast Description

rovides a lexer, parser, call tree processing and symbol resolution to

create an intermediate form representation (unified abstract syntax

tree or UAST) of a Progress 4GL source tree in a manner that allows

easy programmatic tree walking and manipulation for the purposes of

inspection, analysis, interpretation, translation and conversion.

Lexer

Parser

Call Tree

The term "parser" refers to software that takes the stream of meaningful tokens (provided by the lexer) and performs a syntax analysis of the contents based on its knowledge of the source language. The result of running a parser is a determination of the syntactical correctness of the input stream and a list of the language "sentences" that comprise the source. In other words, while the lexical analyzer operates on the input stream to create a sequential list of the valid tokens, the parser reassembles these tokens back into "sentences" that have meaning that can be evaluated or executed.

To illustrate, if our source file contains the following Progress 4GL source code:

/* this is a

sample program */

MESSAGE

"Hello World!".

Then the lexical analyzer might (the exact output depends on the exact implementation) generate the following list:

/* this is a sample program */

MESSAGE

"Hello World!"

.

You can see that white space (spaces, tabs, new lines) has been removed. Each token is an atomic entity that can be considered on its own or as a part of a larger language specific "sentence" that can be evaluated.

The parser would take this list and piece things back together into a list of sentences that are syntactically correct and are meaningful for evaluation. This is the flat view of the result (a "degenerate tree"):

/* this is a sample program */

MESSAGE "Hello World!".

Typically, these resulting sentences will be provided in a tree structure, sometimes called an intermediate form representation or an abstract syntax tree (AST). The resulting tree might look something like the following:

external_program

|

+--- comment (/* this is a sample program */)

|

+--- statement

|

+--- keyword_message (MESSAGE)

|

+--- string_expression ("Hello World!")

|

+--- dot (.)

Note that the parenthesized text is the original text from the source file. Each tree node is artificially manufactured, having a specific defined "token type" which can be referenced in code that walks the tree.

Client code that uses the output of the parser can walk the tree and inspect, analyze, interpret, transform or convert it as necessary. For example, the tree structure naturally puts expressions in RPN (reverse polish notation), otherwise known as postfix notation. This is required to properly evaluate the expression (and generate results with the correct precedence). This is significantly easier than the interpretation or evaluation of expressions using the human-readable infix notation.

By using a common lexical analyzer and parser, the tricky details of the Progress 4GL language syntax can be centralized and solved properly in one location. Once handled properly, all other elements of the conversion tools can rely upon this service and focus on their specific purpose.

Parser and lexer generators have reached a maturity level that can easily create results to meet the requirements of this project. This creates a savings of considerable development time while generating results that have a structure and level of performance that meets or exceeds the hand-coded approach.

In a generated approach, it is important to ensure that the resulting code is unencumbered from a license perspective.

The current approach is to use a project called ANTLR. This is a public domain (it is not copyrighted which eliminates licensing issues) technology that has been in development for over 10 years. It is used in a large number of projects and seems to be quite mature. It is even used by one of the Progress 4GL vendors in a tool to beautify and enhance Progress 4GL source code (Proparse). The approach is to define the Progress 4GL grammar in a modified Extended Backus-Naur Form (EBNF) and then run the ANTLR tools to generate a set of Java class files that encode the language knowledge. These resulting classes provide the lexical analyzer, the parser and also a tree walking facility. One feeds an input stream into these classes and the lexer and parser generate an abstract syntax tree of the results. Analysis and conversion programs then walk this tree of the source code for various purposes. There are multiple different "clients" to the same set of ANTLR generated classes. Each client would implement a different view and usage of the source tree. For example, one client would use this tree to analyze all nodes related to external or internal procedure invocation. Another client would use this tree to analyze all user interface features.

P2J uses ANTLR 2.7.4. This version at a minimum is required to support the token stream rewriting feature which is required for preprocessing.

Some useful references (in the order in which they should be read):

Building Recognizers By Hand

All I know is that I need to build a parser or translator. Give me an overview of what ANTLR does and how I need to approach building things with ANTLR

An Introduction To ANTLR

BNF and EBNF: What are they and how do they work?

Notations for Context Free Grammars - BNF and EBNF

ANTLR Tutorial (Ashley Mills)

ANTLR 2.7.4 Documentation

EBNF Standard - ISO/IEC 14977 - 1996(E)

Do not confuse BNF or EBNF with Augmented BNF (ABNF). The latter is defined by the IETF as a convenient reference to their own enhanced form of BNF notation which they use in multiple RFCs. The original BNF and the later ISO standard EBNF are very close to the ABNF but they are different "standards".

Golden Code has implemented its own lexical analyzers and parsers before (multiple times). A Java version done for the Java Trace Analyzer (JTA). However, the ANTLR approach has already built a set of general purpose facilities for language recognition and processing. These facilities include the representation of an abstract syntax tree in a form that is easily traversed, read and modified. The resulting tree also includes the ability to reconstitute the exact, original source. These facilities can do the job properly, which makes ANTLR the optimal approach.

The core input to ANTLR is an EBNF grammar (actually the ANTLR syntax is a mixture of EBNF, regular expressions, Java and some ANTLR unique constructs). It is important to note that Progress Software Corp (PSC) uses a BNF format as the basis for their authoritative language parsing/analysis. They even published (a copyrighted) BNF as 2 .h files on the "PEG", a Progress 4GL bulletin board (www.peg.com). In addition, the Proparse grammar (EBNF based and used in ANTLR) can also be found on the web. Thus it is clear that EBNF is sufficient to properly define the Progress 4GL language and that ANTLR is an effective generator for this application. This is the good news.

However, there is a very important point here: the developers have completely avoided even looking at ANY published Progress 4GL grammars so that our hand built grammar is "a clean room implementation" that is 100% owned by Golden Code. The fact that they published these on the Internet does not negate their copyright in the materials, so these have been treated as if they did not exist.

An important implementation choice must be noted here. ANTLR provides a powerful mechanism in its grammar definition. The structure of the input stream (e.g. a Progress 4GL source file) is defined using an EBNF definition. However, the EBNF rules can have arbitrary Java code attached to them. This attached code is called an "action". In the preprocessor, we are deliberately using the action feature to actually implement the preprocessor expansions and modifications. Since these actions are called by the lexer and parser at the time the input is being processed, one essentially is expanding the input directly to output. This usage corresponds to a "filter" concept which is exactly how a preprocessor is normally implemented. This is also valid since the preprocessor and the Progress 4GL language grammars do not have much commonality. Since we are hard coding the preprocessor logic into the grammar, reusing this grammar for other purposes will not be possible. This approach has the advantage of eliminating the complexity, memory footprint and CPU overhead of handling recursive expansions in multiple passes (since everything can be expanded inline in a single pass). The down side to this approach is that the grammar is hard coded to the preprocessor usage and it is more complicated since it includes more than just structure. This makes it harder to maintain because it is not independent of the lexer or parser.

The larger, more complex Progress 4GL grammar is going to be implemented in a very generic manner. Any actions will only be implemented to the extent that is necessary to properly tokenize, parse the content or create a tree with the proper structure. For example, the Progress 4GL language has many ambiguous aspects which require context (and sometimes external input) in order to properly tokenize the source. An example is the use of the period as both a database name qualifier as well as a language statement terminator. In order to determine the use of a particular instance, one must consult a list of valid database names (which is something that is defined in the database schema rather than in the source code itself). Actions will be used to resolve such ambiguity but they will not be used for any of the conversion logic itself. This will allow a generic syntax tree to be generated and then code external to the grammar will operate on this tree (for analysis or conversion). In fact, many different clients of this tree will exist and this allows us to implement a clean separation between the language structure definition and the resulting use of this structure.

The following is a list of the more difficult aspects of the Progress 4GL:

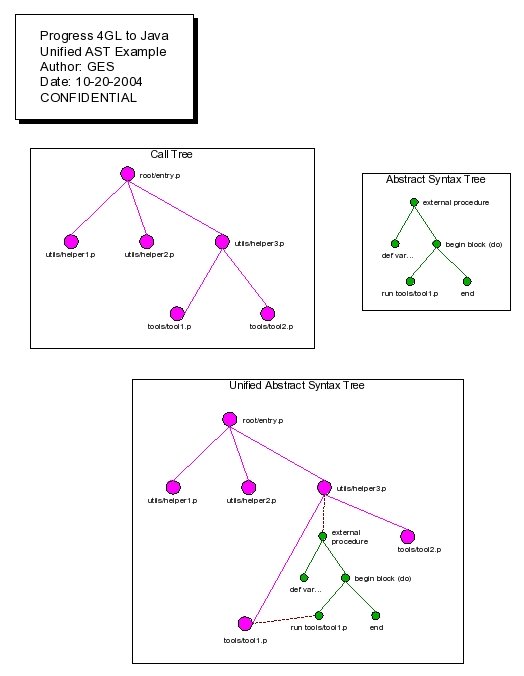

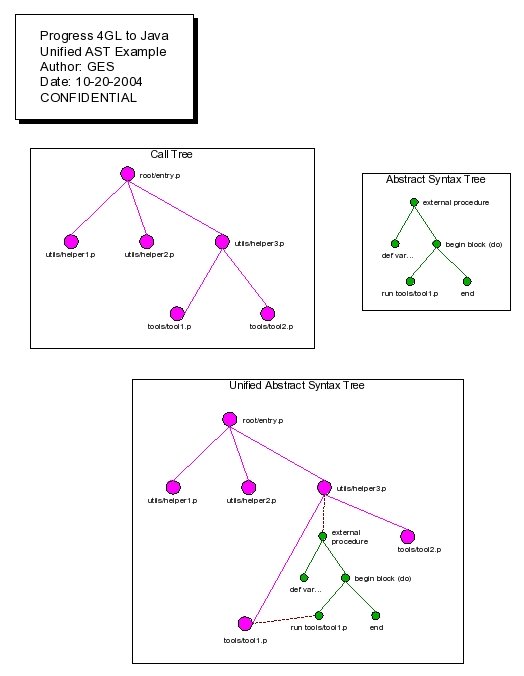

Since most Progress 4GL procedures call other Progress 4GL procedures which may reside in separate files, it is important to understand all such linkages. This concept is described as the "call tree". The call tree is defined as the entire set of external and internal procedures (or other blocks of Progress 4GL code such as trigger blocks) that are accessible from any single "root" or application entry point. It is necessary to process an entire Progress 4GL call tree at once during conversion, in order to properly handle inter-procedure linkages (e.g. shared variables or parameters), naming and other dependencies between the procedures.

One can think of these 2 types of trees (abstract syntax trees and the call tree) as 1 larger tree with 2 levels of detail. The call tree can be considered the higher level root and branches of the tree. It defines all the files that hold reachable Progress 4GL code and the structure through which they can be reached. However as each node in the call tree is a Progress 4GL source file, one can represent that node as a Progress 4GL AST. This larger tree is called the "Unified Abstract Syntax Tree" (Unified AST or UAST). Please see the following diagram:

By combining the 2 levels of trees into a single unified tree, the processing of an entire call tree is greatly simplified. The trick is to enable the traversal back and forth between these 2 levels of detail using an artificial linkage. Thus one must be able to traverse from any node in the call tree to its associated Progress 4GL AST (and back again). Likewise one must be able to traverse from AST nodes that invoke other procedures to the associated call tree node (and back).

Note that strictly speaking, when these additional linkage points are added the result is no longer a "tree". However for the sake of simplicity it is called a tree.

This representation also simplifies the identification of overlaps in the tree (via recursion or more generally wherever the same code is reachable from more than 1 location in the tree).

To create this unified tree, the call tree must be generated starting at a known entry point. This call tree will be generated by the "Unified AST Generator" (UAST Generator) whose behavior can be modified by a "Call Tree Hints" file which may fill in part of the call tree in cases where there is no hard coded programmatic link. For example, it is possible to execute a run statement where the target external procedure is specified at runtime in a variable or database field. For this reason, the source code alone is not deterministic and the call tree hints will be required to resolve such gaps.

Note that there will be some methods of making external calls which may be indirect in nature (based on data from the database, calculated or derived from user input). The call tree analyzer may be informed about these indirect calls via the Call Tree Hints file. This is most likely a manually created file to override the default behavior in situations where it doesn't make sense or where the call tree would not be able to properly process what it finds.

The UAST Generator will create the root node in the call tree for a given entry point. Then it will call the lexer/parser to generate a Progress 4GL AST for that associated external procedure (source file). An "artificial linkage" will be made between the root call tree node and its associated AST. It will then walk the AST to find all reachable linkage points to other external procedures. Each external linkage point represents a file/external procedure that is added to the top level call tree and "artificial" linkages are created from the AST to these call tree nodes. Then the process repeats for each of the new files. They each have an AST generated by the lexer/parser and this AST is linked to the file level node. Then additional call tree/file nodes will be added based on this AST (e.g. run statements...) and each one gets its AST added and so on until all reachable code has been added to the Unified AST.

This resulting Unified AST is built based on input that is valid, preprocessed Progress 4GL source code.

For a more detailed diagram, use this link.

One of the more complicated aspects of the lexer is th implementation of language keywords (and their associated abbreviation support). Please see the following reference for why the preprocessor and parser don't implement keyword abbreviation support instead of the lexer.

Some features that may be expected to be implemented in the lexer are actually implemented in the preprocessor. This is done for 2 reasons:

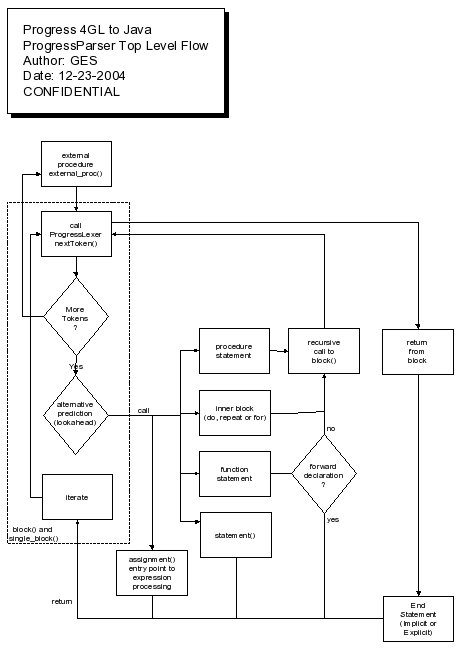

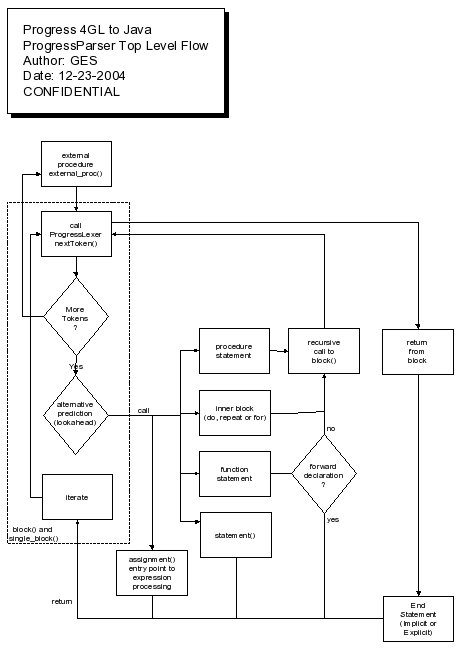

The primary job of the parser is to take the token stream (represented by the Lexer) and match that with the proper sets of rules that converts the flat stream of tokens into a 2 dimensional tree (AST). In addition, there are many ambiguities of the Progress language that could not be resolved at the lexer level since the solution requires knowledge of the parser's context. Due to issues in how the generated code handles lookahead, it is not feasible to share state between the lexer and parser (thus providing feedback to the lexer at the time that the token is created). Instead the parser must rewrite the tokens based on its context. Please see the ProgressLexer overview for more details.

The following sections describe the most critical issues facing the parser. At the end of each subsection is a list of links that should be reviewed to obtain more details. At the end is the implementation plan for the completion of the ProgressParser. Please see this link for a top-level overview of the parser.

Data type support is primarily a function of providing the correct token types for each data type and resolving lvalues (variables and fields form the schema) and function calls to the correct data types. This enables expressions, assignments and other data type specific parsing to occur.

Special abbreviation processing is implemented in the processing of the AS clause since the documented abbreviations (or lack thereof) are not followed in this case. See the table above for the real minimum abbreviations of the data type names.

Variable and field types can optionally be specified as single dimensional arrays (multi-dimensional arrays are not supported in Progress 4GL). The syntax for specifying an array relies upon the use of the EXTENT keyword in a DEFINE VARIABLE statement or in the data dictionary. When defining variables, the INITIAL keyword can be used to with the [,,,] array initializer syntax to provide a list of initial values. If there are fewer values than array elements, then the last value is used to initialize into all remaining uninitialized array elements. In addition the LABEL keyword can be used with the <string>, <string>... syntax to provide separate labels for each element in an array.

To reference an array element, the [ integer_expression ] syntax is used (contrary to some of the documentation, it does not need to be an integer constant). Whitespace can be placed before the opening left bracket or anywhere inside the brackets. The lvalue rule makes an optional (zero or one) rule reference to the subscript rule. An example reference might be hello[ i + 3 ] where i is an integer variable that holds a value that is positive and less than the array size - 2. All Progress array indices are 1 based (the first element is referenced as array[1]).

The subscript rule implements the subscripting syntax. This subscript rule also implements the range subscripting support using the FOR keyword and a following integer constant to specify the ending point to the integer expression's starting point (e.g. hello[1 for 4] references the first 4 elements of the hello array).

Method and attribute support has been added to the lvalue rule. It is tightly bound to variable and field references and can be optionally present in many locations. At this time, the support is highly generic (the correct resulting data types are not determined).

For more details, see:

A built-in is a symbol defined by the Progress language as a set of keywords that represent language statements, functions, widgets, methods and attributes. These keywords can be either reserved or unreserved. In addition, some keywords can be arbitrarily abbreviated down to any number of characters limited by some minimum number. For example, the DEFINE keyword can be shortened to DEFIN, DEFI, or DEF. Not all keywords can be abbreviated. There are examples of both reserved and unreserved keywords supporting abbreviations.

A user-defined symbol is a programmer created name for some application-specific Progress construct that the programmer has created. The symbol naming in this case is quite flexible. Names can be up to 32 characters long, must start with an alphabetic letter and can then contain any alphanumeric character as well as the characters # $ % & - or _. Reserved keywords cannot be used as user-defined symbols, while unreserved keywords can. Keywords referring to entities of like type will be hidden by a user-defined symbol of that type. For example, it is possible to hide certain built-in functions with a user-defined function of the same name. Once defined in a given scope, the original Progress resource that is hidden cannot be accessed.

Types of Symbols:

Each of these types of symbol must be stored in a namespace. That namespace may contain more than one symbol type, or may be unique to a specific type of symbol.

Each namespace is populated with a new symbol name when the associated entity is defined or (in some cases) declared. Once a name is added to the namespace, it can be used in other source code references as long as the namespace is visible to that code. For example, "define variable" statements must always precede all references to the defined symbol.

Some namespaces are flat and some are scoped. A flat namespace is one in which the same names are visible no matter the context from which a namespace query originates. A scoped namespace is essentially a cooperating stack of namespaces, where a new namespace is added to the top of the stack at the start of a new scope and that top of stack namespace is removed at the end of the scope. Lookups start at the top of the stack and work down the stack until the first matching name is found. This scoped approach allows for symbol hiding where names in one scope can be the same as a name in a different scope, but only the instance that is in the closest scope to the top is what is found.

Local symbols generally hide shared scoped/shared global variables. Local symbols in an internal procedure will hide the same names in an external procedure.

Symbol namespaces are different by type of symbol. There is no unified namespace. One of the implications is that functions and variables of the same name must be differentiated (by context) and cannot hide each other.

Built-in functions and language statements of the same name are differentiated in the parser by context. There is no simple rule for detecting this based on syntax alone since there are many Progress built-in functions that do not take parenthesis (when they have no argument list) and likewise there are language statements that can be followed by a parenthesized expression that would appear syntactically like a function (there can be whitespace between function names and the parenthesized parameter list).

User functions and user variables are differentiated by the presence or absence of (). There is never a valid expression that has an lvalue followed immediately by ( ).

Only internal procedures, functions and triggers add scoping levels for variables. Other types of blocks are scoped to the external procedure level, which is the same as saying that any defined variables inside non-procedure blocks (DO, REPEAT, FOR...) are scoped at the same level as global variables from the external procedure's perspective.

Symbol resolution is the process by which a reference to a particular symbol type is queried from the corresponding namespace. The lookup occurs at the point in the parsing at which the reference is made. This lookup may return critical information that is the associated with the node being built in the AST for that reference. For example, when variable references are found in a namespace, the query returns a token type that is assigned to that tree node (e.g. the token type for the node is converted from a generic SYMBOL to a token type that matches the specific variable data type such as VAR_INT).

The SymbolResolver class encapsulates the various namespaces (a.k.a. dictionaries) that are in use. It provides maintenance and lookup services to the lexer and parser. Lookups are inserted at key locations in the parser. In these locations, the token type of the symbol is usually rewritten. This allows the parser's context to drive the decision on which namespace to use and how to interpret the symbol. This is critical since there is no unified namespace.

One unique namespace is that for language keywords. These are the only symbols that do not require an exact match but instead can be abbreviated. This special namespace is implemented by Keyword and the KeywordDictionary classes. This namespace is shared between the lexer and the parser since the keyword lookup is first done by the lexer's mSYMBOL method. To keep the implementation of the parser and also the preprocessor to the simplest implementation, the lexer is the optimal location for this function. Since the preprocessor is implemented with a minimum of knowledge of the Progress language, to handle the abbreviations there would add significantly more complexity (especially since there is a mixture of reserved and and unreserved keywords). The keyword processing cannot be completely put in the parser because the Progress language is so keyword rich that the parser must already see the tokens as language keywords from the topmost parser entry point (all the way down as it recursively descends the tree). The parser then aggregates the language statements back together based on already knowing exactly which keyword has been found. In other words, the lexer will assign a token type to each token found. This token type is what the parser keys off of in determining how to group the tokens into language statements.

Keeping the abbreviations out of the preprocessor has the advantage of exactly duplicating the Progress preprocessor output (which doesn't expand the abbreviations). This is important to be able to test and prove that the preprocessor is 100% correct/compatible.

For more details, see:

Schema names refer to the following schema entities:

database1 -> table1 ---> field1

-> table2 ---> field1

|

+-> field2

database2 -> table2 ---> field1

-> table3 ---> field1

|

+-> field2

All connected databases contribute to the namespace and can be referenced directly. Within a specific part of the tree (at any containing level such as within a single database or within a single table) each contained name must be unique. But between databases and tables, the contained names can be the same (the text is an exact match) because they live in separate parts of the tree. This is a cause of ambiguity when it is not clear which part of the tree is being referenced, such that multiple matches can be made to the same lookup. This means that there can be inherent ambiguity between:

Such ambiguities are resolved by the following:

The schema namespace is something that is predefined at the start of a procedure. This is due to the Progress requirement that all databases to be used by a procedure must be connected at the time of compilation. This means that the database connection (using the CONNECT statement) must be done using command line arguments when starting Progress, must be manually done in the Data Dictionary before running a procedure or must be programmatically done before running a specific procedure that is dependent upon such a connected database. At the start of a Progress procedure, the schema namespace is defined based on all database, table, field, index and sequence names available in the schemas of all connected databases. To mimic this, the P2J parser will need the same information as extracted from the schema during the schema conversion of all schemas that are in use. In addition, the parser will need to know the names of all connected databases. Both of these features will be provided by the SchemaDictionary class in the schema package. This class will use a persistent configuration file or set of files to obtain this information and the SymbolResolver will hide the instantiation and usage of this class from the parser.

Since these schema names are all known before the procedure runs, there are no preceding language statements that allow us to populate the dictionary as the parsing occurs. Instead, such names can be referenced at any time. This is different than how variables work. All variables that can be accessed by a procedure must have a corresponding "define variable", "define parameter" or "parameter clause" that adds the associated name and type to the variable dictionary. In lookups, this variable dictionary has precedence over the schema dictionary since a variable of the same name as an unqualified field will hide the field (one cannot refer to the field without more explicitly qualifying it).

In order to handle partially or fully qualified names, the lexer was made aware of how to tokenize such symbols. This dramatically reduces the effort since the parser would have to lookahead up to 5 tokens:

symbol_or_unreserved_keyword DOT symbol_or_unreserved_keyword DOT symbol_or_unreserved_keyword

Once a match was found, these tokens would have to be combined into a single token. The logic would be very complicated when one considers that this could be needed for tables or fields. It is made even more complicated by the fact that whitespace is lost in the lexer but is relevant to the resolution of which tokens should be combined into a single resulting token. Implementing this in the lexer is a necessity.

To handle qualified names in the lexer, the mSYMBOL rule was modified. This rule is inherently greedy, which is how Progress works as well. This means that Progress will match the largest possible qualified name that is specified and then do a lookup. A non-greedy approach would try a lookup on the first possible name and then extend the symbol to include any DOT and additional symbol, try the lookup again and so on until a match was found. Other critical assumptions are required to implement this rule:

Of course, the matching is limited to 3 part qualified names (separated by 2 DOTs) so the 3rd dot can will always signal the end of the qualified name even without intervening whitespace.

Once a qualified schema name is matched, the token type is set to DB_SYMBOL. Normal keyword lookup is bypassed for such tokens as we have not found any keyword using a DOT character, nor can we use even a reserved keyword as the start of a new statement without intervening whitespace. This means that we know that this is a database symbol and can set it to this generic type to be resolved later in the parser. Of course, unqualified database/table/field names will not be detected as a database symbol and so these will be tokenized as a SYMBOL or as a keyword if there is a match during keyword lookup. The parser handles the conversion of all of these generic forms into the proper data types (e.g. FIELD_INT, FIELD_CHAR...) based on context.

Complicating the schema name resolution is the Progress feature that some schema names can be arbitrarily abbreviated. Field names and table names in particular can be abbreviated to the shorted unique sequence of leftmost characters that unambiguously match a single entry in the namespace being searched.

All lookups (fields/tables/databases) are done on a case-insensitive basis, just like all other Progress symbols.

Taking this into account, the following are the matching processes:

Database names are looked up using a single level, exact match search of the connected databases. A Progress 4GL compiler error would occur if the name being search for does not exist in this list. No abbreviations or qualifiers are in effect during this search.

Table names are looked up with a 4 step process:

Unqualified field references from any kind of record (table, temp-table, work-table, buffer) will collide if they are non-unique, even if the search was done on an exact match, except in such cases where the associated record is in "scope" making that unqualified name unambiguous.

An exact match with a name always supersedes a conflicting abbreviation.

Note that user-defined variable names cannot be abbreviated and database names cannot be abbreviated. The abbreviation processing is only done for fields and tables.

Buffer names cannot be abbreviated but buffer field names can be abbreviated. Temp-table and work-table names cannot be abbreviated, however temp-table and work-table field names CAN be abbreviated.

Given 2 names cust and customer (which could both be tables or both fields) where one is a subset of the other:

No ambiguity is allowed at runtime, ever. If there is more than 1 match found via any of these matching algorithms, then a compile-time error will occur. This means that all source code read by P2J can be assumed to have 1 and only 1 match. There will never be 0 matches nor will there be 2 or more matches. The primary advantage of this fact is that the logic of any search algorithms is insensitive to the order in which the search is made. This means that the P2J environment can be coded using arbitrary search ordering without affecting the outcome. This is true for unqualified name searches and for partially or fully qualified names.

Since the namespace being used depends upon the databases that are connected when a procedure is called, the only time it is completely safe to use unqualified field names is when a specific record scope is in effect, causing normally ambiguous names to be unambiguous. Although the scoping rules are arcane and poorly documented, they can be relied upon.

The schema namespace is not completely static. Dynamic modification of the namespace is possible by defining buffers, temp-tables and work-tables. All of these are equivalent to a table and have field definitions.

Buffers are equivalent (from a schema perspective) to an already existing table. They just represent a different memory allocation in which a record from the referenced table can be stored. From this perspective, one might think of buffers as aliases for a table, at least from the parser's perspective. In reality, there is more going on of which the parser is unaware.

Buffers can be created, referenced or passed into an external procedure AS WELL AS AN internal procedure AND IN A function. Buffers

can only be referenced within the scope that they are defined, so a buffer defined in an external procedure is accessible until that procedure ends. A buffer defined in an internal procedure or function is inaccessible after that internal procedure or function block ends.

Temp-tables and work-tables are both treated like dynamically adding a table to the schema (not associated with any database). This table can optionally copy the entire schema structure of a current table and/or add arbitrary fields to the definition. It appears there is no way to remove fields from the definition if one bases it upon an existing table.

Temp-tables and work-tables can be created inside an external procedure, a shared instance can be accessed inside an external procedure or an instance can be passed in as a parameter. In all cases, such tables can be referenced from the moment of definition/declaration until the end of that external procedure.

When defining or adding temp-tables, work-tables and buffers, these entities can be scoped only for the duration of a do, for or repeat block. They are always scoped to a procedure or function (buffers only).

Temp-table/work-table and buffer names that are added/defined, will hide an unqualified conflicting table name (such that the only way to

reference that database table is with a fully qualified name).

Within a single external procedure (or internal procedure/function in the case of buffers), you can never define duplicate temp-table,

work-table or buffer names.

It is possible to define a buffer FOR a buffer that refers to a temp-table. However, Progress does not allow a buffer FOR a buffer that refers to a table.

The schema namespace must handle these dynamic changes through methods that are called by the parser in specific rules which implement Progress language statements such as define buffer, define temp-table and define work-table.

Another type of namespace modification is the use of aliases. All connected databases have a "logical database name" that is used to qualify table and field names. By using aliases, one can create additional logical names that are equivalent to the original database name. Any references to the alias are treated as references to the original database name. One trick that is often used is that a database is aliased before running another external procedure that uses the same schema by a different logical name. This allows a common procedure to be used against 2 or more databases as long as the schema are identical or at least compatible in the areas in use. The problem in such tricks is that this is done external to the code being run, so the parser must be externally informed or the schema namespace must be prepared to handle all possible aliases. If there are cases in which the same alias is used for different databases then this may be not be statically defined in a configuration file. This area needs additional definition before it can be implemented.

A record scope is the beginning and ending of a buffer (associated with the table or "record" in question) that can be used to store real rows of data. This scope determines many things such as when data is written to the database or when a new record is fetched. However, from the parser's perspective, the only reason why record scope matters is because it affects unqualified field name resolution.

Progress databases are very likely to have a large number of field names that are duplicated between tables. For this reason, these field names are not unique across the database and thus in normal circumstances, they can only be unambiguously referenced when they are qualified. Wherever there are long lists of field names, it is convenient to allow the more liberal usage of unqualified names. This is possible whenever there are one or more record scopes that are active. In this case, the list of unique unqualified fields can be expanded based on the list of those tables in scope at the time. If multiple tables are in scope, then the set of all field names that are unique across that list of tables in scope will be available for searching even if those names would normally be unavailable due to conflicts with other tables. As long as the conflicts are only with tables that are not in scope, those unqualified names can be used. If however, the same field name is used in 2 or more of the tables that are in scope, that name still needs to be qualified on lookup.

Buffers are referenced via a name. That name can have been explicitly defined in the current external procedure, internal procedure or function using one of the following:

DEFINE BUFFER buffername FOR record

DEFINE PARAMETER BUFFER buffername FOR record

FUNCTION ... (BUFFER buffername FOR record, ...)

If not explicitly defined, then the buffer name must be implicit defined based on a reference to a table name (database table, temp-table or work-table) inside a language statement or built-in function.

Buffers can only be scoped to an enclosing block which has the record scoping property. All block types (external procedures, internal procedures, triggers, functions, repeat and for) except DO have the record scoping property by default. Even a DO obtains record scoping when either the FOR or PRESELECT qualifier is added (these are mutually exclusive).

Internal procedures, triggers and functions additionally have namespace scoping properties such that an explicit definition of a buffer name in one of these blocks will hide the same explicit buffer name in the containing external procedure. However, if no "local" definition exists for an explicit buffer name, references in these contained blocks will cause the original definition in the external procedure to be scoped to the external procedure level itself. Please note that any explicit buffer definitions inside an internal procedure, trigger or function cannot be used outside of that block. The external procedure is different in this respect (this is the same behavior as Progress provides for variables).

If a buffer is not explicitly created, then it is created implicitly via a direct table name reference in the source code. For example, if a table named customer exists in the currently connected database, then any of the following language constructs that reference customer (e.g. create customer) cause a buffer of the same name (customer) to be created. This can be confusing since some language constructs use this same name to reference the schema definition of that table, some use the name to reference the table and some use the name as a buffer. This polymorphic usage is considered an "implicit" buffer.

Whether or not a language construct creates a buffer is determined by the type of the reference. The following maps language constructs to the type of record reference:

There are 4 types of record references (to database tables, temp-tables or work-tables) which may cause a buffer to be created. If this reference is implicit, the resulting buffer is named the same as the table to which it refers. It is important to note that even when a reference does create a buffer (allocate memory) only a small subset of the above statements actually fill it with a record (all buffers only hold a single record at a time). Other statements (e.g. FIND, FOR or GET) may handle both the creation of the buffer and the implicit loading of that buffer with the first record.

The following sections describe each of the reference types. 3 of the 4 types can cause the creation of a new buffer. Multiple references to the same buffer are sometimes combined into a single buffer creation and at other times more than 1 buffer can be created. The following describes the rules by which the number of buffers are determined. For each buffer that is created, there is also a "record scope" that corresponds to this buffer. This scope determines the topmost block in which the buffer is available.

In the following rules, the use of "implicit" should not be confused with whether or not a buffer name has been explicitly or implicitly referenced. In many cases, Progress will implicitly create a larger scope for a buffer such that multiple references share the same buffer. This is the sense in which the word implicit should be interpreted. Most importantly, the scoping rules (both the explicit rules and the implicit expansion rules) are applied in the same exact manner for both explicitly defined buffers as for implicitly defined buffers.

No Reference

Some name references can occur in many language statements, even before these scope creating statements. For example, a DEFINE TEMP-TABLE <temp-table-name> LIKE <table_name> statement is such a reference that can occur anywhere in a procedure. No buffer is being referenced here, instead it is the schema dictionary (the data structure of the record) that is being referenced.

Strong Reference

Only two language statements (both are kinds of block definitions) can be a strong reference (DO FOR and REPEAT FOR). A new buffer is created and that buffer is scoped to the block being defined. Strong scopes are explicitly defined by the 4GL programmer and the Progress environment never implicitly changes the scope to be larger (as can happen with the next two reference types). A strong reference doesn't ever implicitly expand beyond the block that defines it.

Other reference types can be nested inside a strong scope and the scope of these references is always raised to the strong scope. Thus every buffer reference within a strong scoped block is always scoped to the same buffer.

Additionally, no free references to the same buffer are allowed outside the block defining a strong scope (the code will fail to compile). Weak references to the same buffer can be placed outside or inside a strong scope.

Weak Reference

Only three language statements (all of which are kinds of block definitions) can be a weak reference (FOR EACH, DO PRESELECT and REPEAT PRESELECT). Depending on other references (or the lack thereof) in a file, a weak reference may create a new buffer and scope that buffer to the block in which the buffer was referenced.

If a weak reference to a buffer is nested inside a block to which the buffer is strongly scoped, then this reference does not create a new buffer and is simply a reference to that same strongly scoped buffer.

If a weak reference is not nested inside a strong scope, it will create a new buffer and scope that buffer to the block definition which made the weak reference, BUT this will only happen if no unbound free references to the same buffer exist (see Free Reference below for details).

With the existence of one or more unbound free references, it is possible that the weak reference will have its scope implicitly expanded to that of a containing scope. For this to occur, the scope of the buffer will be expanded to the nearest block that can enclose the multiple references to this same buffer. The problem is that there are complex rules to determine which references (weak and free) are combined into a single buffer (and its associated scope) and which weak references are left alone.

Free Reference

Anything that is not a "no reference", strong reference or weak reference is a free reference.

If the free reference is contained inside a block to which the referenced buffer is strongly scoped, then this is simply a reference to that same buffer and no buffer creation or scope change will occur.

If the free reference is contained inside a block to which the referenced buffer is weakly scoped, then this is simply a reference to that same buffer and no buffer creation or scope change will occur. Please note that the Progress documentation incorrectly states that "a weak-scope block cannot contain any free references to the same buffer". More specifically, one cannot nest certain free references to a given buffer that is scoped to a FOR EACH block (which is the most common type of weak reference). For example, one cannot use a FIND inside a FOR EACH where both are operating on the same buffer. However, one should note that field access is a form of free reference and this is perfectly valid (as one would expect) inside a FOR EACH.

Contrary to the Progress documentation, if the weak reference is created by a DO PRESELECT or REPEAT PRESELECT, then it is required that one use one or more free references in order to read records since neither of these blocks otherwise provide record reading. In this case, even FIND statements work within such a block.

If the free reference is not nested inside a block to which the same buffer is already scoped, then this free reference may cause the scope of other free references and weak references to be implicitly expanded to a higher containing block.

In two cases (FOR FIRST and FOR LAST), a free reference is part of a block definition. In such a case, if there is no need to implicitly expand the scope for this buffer, it will be scoped to the block definition in which the free reference was made.

Much more common is for a free reference to be a standalone language statement which is not part of a block definition (e.g. any form of FIND). In such cases, whether a buffer is created or not and where that buffer is scoped are decided via a complex series of rules.

It is important to note that in the absence of free references, there will never be any implicit expansion of record scopes. Thus the free reference is the cause of implicit scope expansion.

Implicit Scope Expansion Rules

The following are the rules for scope expansion (Progress does not document these rules, but they have been determined by experience). These rules all assume that all references refer to the same implicit buffer name. The previous rules for scoping are not repeated here as these rules only reflect the logic for implicitly expanding scope. To perform the analysis described here, one must traverse the Progress source AST that the parser creates with "forward" meaning down and/or right from the current node and "backward" meaning up and/or left of the current node. To understand some of the terms in the following rules, one must think of the tree as a list. In other words, one must "flatten" (degenerate) the tree into a simple list (based on the depth first approach of traversing down the tree before traversing right). Using this conceptual list form of the tree, one can see that the "closest prior" (the most recently encountered node that matches some criteria) or "next" (the closest downstream node that matches some criteria) node can be thought of as a simple backward or forward "search" respectively. This is critical to understand these rules since much of the logic is not defined by the nesting of blocks (as would be expected) but is rather defined by the "simple" ordering of the references.

The following are examples to show both the simple/expected behavior as well as each of the more obscure (counter-intuitive) cases. In each example the following explains how to interpret the text:

Nesting Scopes

Scopes can be nested although there are rules against nesting 2 scopes (referring to the same table) that are the same type. So 2 weak scopes for table1 can't be nested and 2 strong scopes of table1 can't be nested. A strong scope cannot be nested inside a weak scope for the same buffer. On the other hand, it is possible to nest a weak scope inside a strong scope where both are referencing the same table. Note that if a weak scope is nested inside a strong scope, that weak scope cannot be expanded by a free reference. The strong scope binds to the specified block and cannot (by definition) ever be expanded.

It is also known that where multiple scopes (to different tables) are active, the subset of unique field names (that are unique across those tables actively in scope) are added to the namespace and any common (non-unique across the active scopes) names are removed from the namespace. This means that as tables are added and removed from the active scope list, each such change can both add and remove from the namespace. Please see the field name lookup algorithm above for more details.

Record scopes create a schema namespace issue because while a record is in scope, unqualified field names resolve which would normally be ambiguous. This is complicated by the fact that the algorithm to decide upon the block to which a given buffer is scoped cannot be implemented in a single-pass parser.

The primary difference between these scopes seems to be in how the scopes get implicitly expanded based on other database references in containing blocks. Normally a scope is limited to the nearest block in which the scope was created. Strong scopes can be removed from namespace consideration as soon as the this block ends. Weak scopes and free references can both be expanded to the containing block depending on a complex set of conditions. Note that within the naturally scoped block, normally ambiguous unqualified field names are made unambiguous. This is straightforward to implement. The problem is that the parser must properly detect when the scope is implicitly expanded and know when to remove the scope. The parser cannot simply leave all weak and free reference scopes in scope permanently. This is because in a complex procedure, as scopes are removed, some names are removed but some names may also be added back! This is due to the fact that adding a scope while other scopes are still active will remove any field names that are in common between all active scopes. So, removing that scope, re-adds those removed names to the namespace. Since the namespace can expand when a scope is removed, it is critical to detect this exact point and implement this removal otherwise the parser will fail to lookup names that should not be ambiguous.

The current approach has been to use the SchemaDictionary namespace to keep track of scopes. At the start of every for, repeat or do block a scope is added and at the end, this scope is removed. This stack of scopes handles the nesting of names properly. Then the parser makes a very big simplifying assumption: every record (table, buffer, work-table or temp-table) reference is automatically "promoted" to the current scope (the top of the stack of scopes). This allows any tables in the current scope to take precedence for unqualified field lookups and then tables in the next scope down take precedence and so on until the name is resolved (remember: valid Progress code will ALWAYS have only unambiguous names so it is only a matter of where one finds it). The problem in this simplistic approach (see the record method) is that Progress may not always promote as we do. Thus in our implementation, there may be promoted tables that wouldn't otherwise be there. This may cause some names that would have been unambiguous (with a more limited set of tables in scope) to now be ambiguous. We don't have an example of such a situation but if it does occur, the implementation may have to get much more smart about when to and when not to promote.

To properly implement scoping support, these conditions must be fully explored and documented. The following list is an incomplete set of testcases which needs to be completed before scoping support can be implemented. In the table, each record that has the same scenario number (in the testcases that have more than 1 row) describes a database reference made inside that scenario. The order of the rows is the same order as used in the testcases. The column entitled "Disambiguates Outside Unqualified Field Name" can be interpreted as whether an unqualified field name can be used outside the block in which the scope was added (it can always be used inside such blocks). This is usually (but not always) just another way of interpreting the "Scoped-To Block" column, since it is the block which the scope is assigned that defines the location of where the scope exits (and thus where unqualified names can no longer be used). There are conditions (see scenarios 6 and 7 below) where the these columns show conflicting results. Note that the LISTING option on the COMPILE statement is used to create a listing file that showed exactly in which block Progress determined the scope to be assigned.

It is very important to note that there is only ever 1 buffer (memory allocation) in each given procedure (internal or external), function or trigger, even though there may be multiple buffer scopes. The scopes affect record-release but the same memory is used for storage of records read in all contained scopes AND Progress doesn't restore the state of the buffer when a nested scope is complete. This means that when a nested scope is added, the record that had been there is released at the end of that scope. Once that record is released, there is no record in the buffer. If there is downstream processing within the outer scope that expects the record to be there, it will find the record missing.

Unless a record is explicitly defined in an internal procedure, function or trigger, the buffer creation will occur at the external procedure level and this same buffer will be in use the entire scope of the external procedure. In the case where an internal procedure, function or trigger does have an explicit buffer defined, this results in namespace hiding and an additional (local) memory allocation. Buffer names can be the same with a buffer in the external procedure, but any explicit definition will create a local instance of that buffer. Nested scopes that operate on that buffer will have no affect on the buffer of the same name in an enclosing external procedure.

Multiple scopes are possible with explicitly defined buffers, just as they are with implicit buffers, even though there is only ever 1 memory allocation per procedure, trigger or function. The exception is for shared buffers that are imported (e.g. DEFINE SHARED BUFFER b FOR customer) rather than allocated locally. In this case, there is only ever a single scope for this buffer and that scope is set to the external procedure. Access to the shared buffer is imported once and is accessible for the lifetime of the procedure. In the cases of NEW SHARED and NEW GLOBAL SHARED buffers, these do cause a memory allocation and they can have multiple scopes just as any other explicit buffer or as the implicit buffers.

Buffer parameters (DEFINE PARAMETER BUFFER bufname FOR table for procedures and (BUFFER bufname FOR table) for functions) cause the resulting buffer:

All field data types are created as token types (there is an imaginary token range) for these. The lvalue rule pulls all possible matches in and has init logic to convert DB_SYMBOL and optionally other symbols (SYMBOL or any unreserved keyword) if they don't match a variable name. The token type is rewritten to one of the valid field types on a match.

There are corresponding rules to match tables (see record) and database names. These rewrite the token types as necessary. At this time, in those places (subrules) where indexes or sequences appear, we implement the symbol rule and after it returns we set the type to INDEX or SEQUENCE respectively.

The SymbolResolver class provides database/table/field symbol lookup against the SchemaDictionary class.

At this time, the following features are not yet implemented:

The following blocks exist in Progress 4GL:

The current parser implements block support for all of the above.

The following flowchart depicts the high level logical flow of the parser. It is this flow that recognizes and enables the block structuring of the resulting tree.

Please see the following for more details:

We handle all numeric data types the same since Progress 4GL "auto-casts" integers into decimals (and vice versa) as needed. Any mixed decimal and integer operations generate a decimal internally. If this is ultimately assigned to an integer, it appears that this conversion is not done until the assignment occurs, which would eliminate rounding errors during the expression evaluation. This seems to be true based on the set of testcases run at this time, but we will need to watch for any deviations from this in practice.

Assignments cannot be made inside an expression. If an lvalue is followed by the EQUALS token, the EQUALS is considered an assignment operator, otherwise the operator is considered a relational operator (equivalence).

The base date for Progress 4GL is 12/31/-4714.

Operator precedence is as follows (in ascending order from lowest to highest):

There are some special built-in functions like IF THEN ELSE which do not follow the generic function call format. For this reason they are implemented as an alternative in a primary expression (see ProgressParser primary-expr()). An example of this can be seen in the ProgressParser if_func() which is the implementation of the IF THEN ELSE.

The only data types that have literal representations are CHARACTER, INTEGER, DECIMAL, LOGICAL and DATE.

Generic support for widget attribute and method references has been added into the ProgressParser primary-expr() rule.

At this time, the P2J parser grammar does not implement operand type checking. This is not feasible to implement using the structure of the grammar itself, however it could be implemented via semantic predicates at some point in the future. Note that the base assumption of this project, that the input files are valid Progress 4GL code makes this somewhat unnecessary. To implement the checks later, use the following list of valid operator/operand combinations based on operand data type:

For more details, see:

Functions

Progress has a wide range of built-in functions. These functions

can support two features that user-defined functions cannot support:

A special return type FUNC_POLY has been created to identify those functions that can implement polymorphic return types.

The function support properly handles the forward declaration form of the FUNCTION statement. This adds the function to the namespace but does not include the defining block that is callable. The block structure of the program is properly maintained in both the forward and normal cases.

The following is the list of supported built-in functions.

ProgressParser function()

ProgressParser func_stmt()

ProgressParser func_call()

ProgressParser reserved_functions()

SymbolResolver lookupFunction()

ProgressParser lvalue()

ProgressParser reserved_variables()

SymbolResolver lookupVariable()

How to Add Keywords:

This interface provides the ability to handle the following:

For the purposes of the rest of this document, it is assumed that all AST nodes are of type AnnotatedAst, even when references as an AST.

For more details, see:

Aast

AnnotatedAst

This multi-step process must be handled in a very specific way and is complex enough that it is best done in a single location. The AstGenerator class provides this service. In addition to managing the generation process, it also provides caching/persistence and file output services for each step. For example, the preprocessor output can be cached in a file and reused upon future runs. The following output is possible:

For more details, see:

AstGenerator

ScanDriver

The AstPersister provides read and write persistence services. It is aware of how to persist an AnnotatedAst including all Aast specific data such as IDs and annotations. The resulting file is an XML file for which the grammar is known only in this class.

For more details, see:

AstPersister

To make such references unique across the project, a registry of files is used. Each file (Progress source or Java target) in the project gets a unique 32-bit integer ID. This ID can be converted into a filename found via the registry. In addition, each node in an AST gets a unique Long ID that is a combination of this file ID (in the upper 32-bits) and an AST-unique node ID (in the lower 32-bits). This long value is persisted into the XML file and is read back in each time the XML file is reloaded. This allows specific AST nodes to be referenced using a single Long value. The AST and the specific node in question can be found, loaded and accessed with nothing more than this Long ID.

The AstRegistry is the core of this implementation.

For more details, see:

AstRegistry

statement [STATEMENT] @0:0

if [KW_IF] @38:1

expression [EXPRESSION] @0:0

if [FUNC_POLY] @38:4

> [GT] @38:9

x [VAR_INT] @38:7

y [VAR_INT] @38:11

then [KW_THEN] @38:13

true [BOOL_TRUE] @38:18

else [KW_ELSE] @38:23

no [BOOL_FALSE] @38:28

then [KW_THEN] @38:31

inner block [INNER_BLOCK] @0:0

do [KW_DO] @39:1

block [BLOCK] @0:0

statement [STATEMENT] @0:0

message [KW_MSG] @40:4

expression [EXPRESSION] @0:0

"then part of if block" [STRING] @40:12

else [KW_ELSE] @42:1

statement [STATEMENT] @0:0

message [KW_MSG] @43:4

expression [EXPRESSION] @0:0

"else part of if block" [STRING] @43:12

Note that the indention level indicates the parent/child relationships. Each line is a different AST node and the file is ordered from the root node to the last child in a depth-first, left-to-right tree walk. The leftmost text on each line is the original text from the source file, the next text (in square brackets) is the symbolic name of the token type and the @x:y is a notation for line number and column number respectively (in the source file). Any entry that has a @0:0 is an artificially created token by the parser which was created to organize the tree for easier processing or searching.

For more details, see:

DumpTree

Call Tree Processing

The table is split into 3 types of entries: invocations, entry points and event processing exits. An invocation is mechanism by which an external linkage is called. An entry point is an exposed target for external linkage which is accessed via an invocation mechanism. An event processing exit is not an entry point and doesn't directly invoke another piece of code but they indirectly allow event code to be called by stopping the normal procedural flow and allowing queued events to be dispatched.

All entries listed as "external file linkage" refer to the fact that these linkages can invoke logic in or be invoked by a separate Progress procedure or a non-Progress executable (e.g. DLL, DDE, external command). This is different from the use of "external control flow" referenced above.

The list of root nodes is prepared manually based on the conversation with the customer and source code review.

There are two possible scenarios. In simple case customer does know all actually used root nodes (main modules) in the application. But for application with a long history of development this is unlikely. They may have unused files which may look like standalone utilities, abandoned ad-hoc procedures and utilities, development-time and test applications. All of them are redundant and should not be included in the list of root nodes. In this case other process is used.

The process of finding root nodes is iterative. Initially potential root nodes are found by review of application sources. The resulting list of root nodes is passed to the call graph generator and resulting call graphs and dead file list provide more information which allows to adjust list of root nodes which is again passed to the call graph generator. When list of root nodes is more or less sustained then it is discussed with the customer in order to determine which root nodes are actually necessary and which are not.

For more details, see:

In order to build the call tree graph, the analysis of the invocation of external modules (files) is performed. The analysis performed in the following way: starting from the the AST of the root node (file), each AST is loaded and scanned in order to determine which external files are invoked by the program. All found files are added into the appropriate node of the call graph as immediate child nodes. If any of these files are not processed, then they are added into the queue of the files which need to be processed. Then processing is repeated for all files in the queue.

Note that the methodology for this analysis is completely based on the list of "root nodes" or "primary entry points" to the application. If the the rootlist.xml (which defines these) is incorrect then the call graphs will be incorrect. The root list may include an entry point which is not actually used or is not part of the application being converted. In this "false positive" case, there will be call graphs generated that are not to be converted. The root list may also be missing an entry point that is used and if so then all files accessible only from such entry points will inappropriately appear in the dead file list.

The analysis of the AST is not as obvious as it may appear. The problem is hidden in the possibilities provided by the language and actively used in the application. In particular, the RUN statement allows calling a file whose name is stored in the variable or which is generated using any expression that returns a character value. The actual value of the variable can be calculated at run time or retrieved from the table. In some cases the variable is shared and assigned in a number of places through the entire application. Invocation is performed by assigning a value to the variable and calling another file which invokes the requested procedure. This case is most complicated because the actual RUN statement invocation and the procedure which is logically the caller are located in different source files.

Another difficult case is the dynamic writing of a Progress source file (at runtime) and then the subsequent invocation of the generated file. This itself is difficult to detect but is made more complicated by the fact that the generated file may call other application files.

Since some forms of external program invocations are not "direct", these expressions can (and usually do) reference runtime data or database fields, the filename often cannot be derived from inspection of the source code. In each instance of this, a hint for that source file is encoded to "patch" the call graph at these points. XML elements are prepared which contain possible values of the variable for each RUN VALUE() statement an associated program file. These hints are stored in a file named using the source filename (including full path) and then adding a ".hints" suffix. These values are then loaded during processing and handled as if they were collected from the AST. Hints files are prepared manually, as a result of analyzing application code, execution paths and real data extracted from the tables.

The call graph is stored as an XML file. The nesting of the XML elements shows the relationship of caller (parent) to called (child). The order of children is the order of the calls. At this time, the call graphs do not represent any duplicate calls or recursive calls. This means that only the first instance of any given file is represented in a call graph. No matter how many times a particular procedure is invoked it will only appear in the graph one time.

All nodes in the call graph are external procedures (Progress .p or .w source files) that are accessible from a particular root entry point. It only reflects the *external* files invoked, internal procedure/function linkage is not reflected. This is by design because these call graphs are essentially lists of ASTs to process (and the order in which the ASTs will be processed). An AST is equivalent to a file. Please note that due to the preprocessing approach of Progress, include files are not necessarily valid source files. All ASTs are created after preprocessing, so the contents of the include files are already embedded/expanded inline. While each file does have a record (a preprocessor hints file that is left behind as an output of the preprocessing step) of exactly which include files were referenced, no include file is represented directly in the call graph itself. Only source files (normally named .p or .w) are represented.

Although many applications make heavy use of execution of operating system specific external processes (e.g. shell scripts, native utility programs), this is not a consideration in terms of generating a call graph of accessible Progress 4GL procedures.

All duplication has been removed from the graph, which transforms the graph into a tree. This is done to make it easy to persist to XML, to make it easy to process and because once an AST is processed it doesn't need to be processed again. In other words, this simplification does not cause any limitations, it only makes things easier.

To create a call graph, the ASTs are walked starting at a root entry point and an in-memory graph is built. The processing dynamically takes into account any added graph nodes as they are added. So the graph continually grows until all ASTs that can be reached are included. The processing of each AST is performed by the Pattern Engine which applies specially designed rule set. The Pattern Engine is started by the specially designed driver CallGraphGenerator.

The rule set in combination with specially designed worker class performs the main part of the processing: it recognizes patterns in the ASTs which represent various invocation methods. The methods provided by the worker class are used to add files not the node and maintain list of ASTs which are processed and waiting for the processing. The rule set consist of two main parts: rules which recognize standard expressions and rules which recognize application-specific patterns. It must be noted that some statements are recognized but no actual processing (adding nodes) is performed. The purpose of these rules is to generate log entries which can be used to detect potential problems during further processing.

The worker class names CallGraphWorker serves following purposes:

The node with file name "./path/root.p" represents root node of the sample call graph. Nodes with file names "./path/referenced1.p" and "./path/referenced2.p" represent files directly called by the code in the "./path/root.p". Files "./path/referenced1_1.p" and "./path/referenced1_2.p" are directly called by "./path/referenced1.p" and so on.

The main Pattern Engine configuration for the call graph generator are located in the generate_call_graph.xml and in a set of .rules files which contain actual rules grouped by the purpose. The set of .rules files includes invocation_reports.rules, run_generated.rules, run_statements.rules and customer_specific_call_graph.rules. First three files contain common rules, while last one contains rules specific for the particular environment.

For more details, see:

If a file appears in any of the call graphs (.ast.graph files), it won't appear in the dead files list and vice versa. The dead files list also identifies include files that are completely unreferenced (in live code).

Any of the dead files may be referenced in other dead code somewhere (either a dead include file or a dead .p or .w file) but they are still inaccessible. At this time there may be (logically) inaccessible code in "live" files and at this time the call graph processing doesn't take such things into account. So if such code adds files to the call graph, they will be treated as "live".