Support #6679

H2 general performance tuning

100%

Related issues

History

#1 Updated by Alexandru Lungu almost 2 years ago

- Related to Support #4701: try to improve H2 transaction commit performance added

#2 Updated by Alexandru Lungu almost 2 years ago

- Related to Support #4702: write temp-table performance test cases added

#4 Updated by Alexandru Lungu almost 2 years ago

- Related to Bug #4057: h2 performance degradation added

#5 Updated by Dănuț Filimon almost 2 years ago

- File table_5.png added

- File table_4.png added

- File table_1-combined.png added

- File table_2-combined.png added

- File table_3.png added

Using the resources available in Performance Testing the H2 Database I have done tests using Apache JMeter and compared the following H2 versions: 1.4.200 patched, 2.0.204, 2.0.206, 2.1.210, 2.1.212, 2.1.214.

SincePageStore is no longer available in newer versions, the following change was made to the Database URL regarding the JMetertest files:

- Removed the option

mv_store=falsein the URL - Most of the tests would not work with this option set to false so I removed it and used

jdbc:h2:mem:_temp;db_close_delay=-1;query_cache_size=1024;instead.

In order to filter out the best choice among the newer versions, I ran the following tests once for each version: full_test_200, full_test_2000, heavy_insert_test_200, heavy_commit_test_200, heavy_delete_test_200, heavy_select_test_200, heavy_update_test_200.

The tests have 200 threads with 5/10 loops. The full tests have 200-2000 randomly generated statements, while the others have around 200 statements which include a predominant type of statement (insert, delete etc.). These tests are fully detailed in #4701-6.

The table on the left has the test results measured in seconds. From the results, we can see that the current version is certainly better than 204 and 206 versions, but performs poorly in comparison with 210 and above versions. The table on the right represents shows a performance percentage of each operation between 200 and the other versions.

As you can see, version 210 has the best performance improvements.

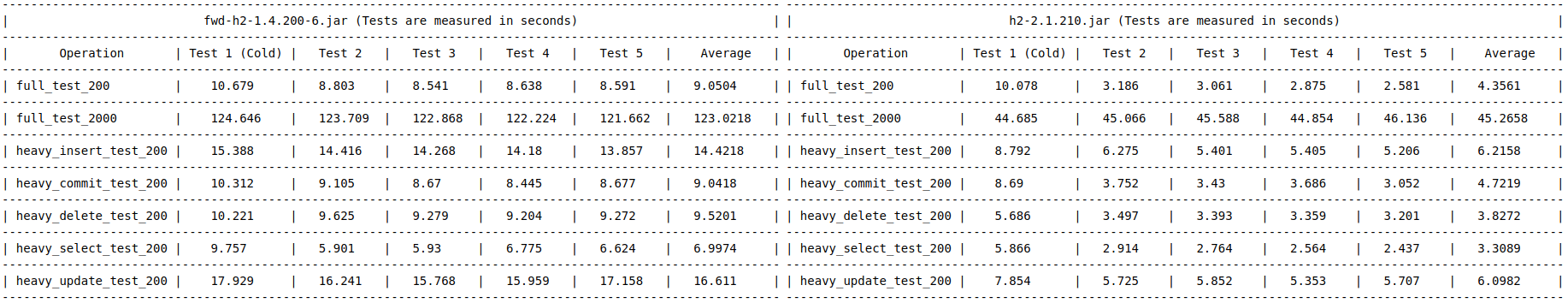

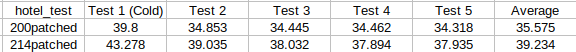

With the results obtained I decided to compare the 200 patched and 210 versions in detail and ran a total of 5 tests for each one. The results were the following:

Based on average, the percentage obtained was:

In comparison with the current version used, 210 is a better choice if we want to make an improvement.

I decided to take the patched H2 version that was available and apply most of the modifications to the 210 version. I also ran 5 tests with this version and obtained the following results:

The percentage obtained in the table below is also based on the average. It shows that the patched 210 version has a better performance.

#6 Updated by Greg Shah almost 2 years ago

- Start date deleted (

08/17/2022)

This sounds quite exciting.

Are the above comparisons using 1.4.200 patched in PageStore mode versus the later versions in mv_store mode?

#7 Updated by Eric Faulhaber almost 2 years ago

Greg Shah wrote:

This sounds quite exciting.

Are the above comparisons using 1.4.200 patched in PageStore mode versus the later versions in mv_store mode?

Yes, nice work!

It is encouraging that MVStore mode is improving, but we really need to know whether it has matched or surpassed the performance of the deprecated PageStore mode. We have not moved to a later version of H2 specifically because MVStore has consistently been slower than PageStore in the versions we've tested, and PageStore was dropped in later versions, as you note.

Specifically, I'd like to see a comparison between 1.4.200 patched in PageStore mode (what we run now) and 2.1.210 (and later) patched in MVStore mode (i.e., the only mode offered).

#8 Updated by Eric Faulhaber almost 2 years ago

Dănuț Filimon wrote:

The tests have 200 threads with 5/10 loops. The full tests have 200-2000 randomly generated statements, while the others have around 200 statements which include a predominant type of statement (insert, delete etc.). These tests are fully detailed in #4701-6.

I know you did not write the tests, so this is a question more for Alexandru: are the randomly generated statements generated at runtime, or were they randomly generated once and immortalized in the tests? If they are randomly generated at runtime, it seems the timing of the test runs would not necessarily be comparable for performance measurement purposes, since the statements could be executing entirely different operations across test runs. I would think we would need to execute exactly the same operations across test runs for the results to be comparable.

#9 Updated by Dănuț Filimon almost 2 years ago

- File table_patch_comparison.png added

Greg Shah wrote:

Are the above comparisons using 1.4.200 patched in PageStore mode versus the later versions in mv_store mode?

Yes, the tests for 1.4.200 patched use PageStore and the later versions use MVStore.

Eric Faulhaber wrote:

I'd like to see a comparison between 1.4.200 patched in PageStore mode (what we run now) and 2.1.210 (and later) patched in MVStore mode (i.e., the only mode offered).

Here is a comparison between the two patched versions using the same results obtained in #6679-5.

Eric Faulhaber wrote:

are the randomly generated statements generated at runtime, or were they randomly generated once and immortalized in the tests?

Tests are not generated at runtime. A python script was used to generate the statements for each operation beforehand, meaning that all the tests were the same when testing different versions.

#10 Updated by Greg Shah almost 2 years ago

Yes, the tests for 1.4.200 patched use PageStore and the later versions use MVStore.

Fantastic! I think it is fair to say we want to get 210patched into 3821c ASAP.

Eric: What do you need from a testing perspective before we can do that?

#11 Updated by Dănuț Filimon almost 2 years ago

I tested version 210 patched with the Hotel_GUI application and there were no major problems, except a syntax difference for retrieving the nextval of a sequence. I identified the problem and I'll fix it.

#12 Updated by Eric Faulhaber almost 2 years ago

Just curious: do the tests use a lot of transactions? This is where we have seen a serious bottleneck in the past.

#13 Updated by Alexandru Lungu almost 2 years ago

Around 20 transactions are done by one user per iteration. There are 200 users per tests (except one test with 10 users). Each user does 5 iterations. So I guess 200 * 5 * 20 = 20.000 transactions. The tests are done with auto-commit false and transaction isolation DEFAULT. In the private (one user per database) mode, I don't think we benefit much from transaction isolation. Maybe we can also work on this (unless a single logical 4GL user can determine multiple parallel transactions on the database).

#14 Updated by Eric Faulhaber almost 2 years ago

- Assignee set to Dănuț Filimon

- Tracker changed from Bug to Support

- Status changed from New to WIP

The fact that there are so many transactions being executed in the tests actually is encouraging to me. Since transaction processing has proven to be a bottleneck in the past, the fact that these transaction-heavy tests are running so much faster with v210 suggests the H2 developers may have done some work in this area. We know that our "real world" use of H2 stresses the transaction code in H2, so this further suggests that we might see some real benefit from this improvement in FWD. This is speculative and perhaps premature on my part, but it is encouraging.

OTOH, I am a bit concerned that the performance drops off again in versions >210. Does the H2 history log suggest that perhaps some aggressive/experimental performance improvements made their way into v210, but had to be adjusted in later versions to fix regressions? We want to be careful about adopting a version that improves performance at the cost of stability.

Of course, the way to address both of these speculations on my part is with testing...

#15 Updated by Eric Faulhaber almost 2 years ago

- Assignee deleted (

Dănuț Filimon)

Alexandru, I'll leave it to you to set the assignee. If both gentlemen are working on this task, you can leave it blank or assign it to yourself.

#16 Updated by Alexandru Lungu almost 2 years ago

OTOH, I am a bit concerned that the performance drops off again in versions >210. Does the H2 history log suggest that perhaps some aggressive/experimental performance improvements made their way into v210, but had to be adjusted in later versions to fix regressions? We want to be careful about adopting a version that improves performance at the cost of stability.

This is my concern too. However, in the past testing iterations of H2, most of the errors were identified right when the profiling was done or Hotel GUI was run. I will stress the new patched v210 H2 artifact against some larger customer projects (until Danut and Radu will have the chance to set up some larger projects on their own stations). I think it is best to:

- extract some SQL from scenarios used in larger projects. Run these in JMeter (private and shared), many users, many iterations. This can give us an insight into the performance increase and stability on real scenarios. There are already some insights in #4701 on how to do that.

- identify some existing resourceful programs in testcases which can help us find regressions. I will share my new testcases done for #6582 (they can come in handy).

- check the adjustments done for v212 and v214. Make a "shorterlist" of the issues which really concerns FWD. These are regression fixes or complex features. Most of the fixes are not quite irrelevant: 4 about persistent H2 regarding

DEFRAG, 6 about compatibility modes which aren't used in FWD and 9 about complex use cases not used right now in FWD (JSON values, SCRIPT command, GEOMETRY values, SYSDATE variable, MERGE statement). However, I am mostly concerned about the following:- v2.1.212: DROP TABLE/INDEX causes memory leak

- v2.1.212: H2 2.1.210: Query with Parameters throws NPE

- v2.1.212: Occasional NPE in concurrent update of LOB

- v2.1.212: Parser can't parse REFERENCES … NOT NULL

- v2.1.212: OOME with nested derived tables

- v2.1.212: Regression: ORDER BY ROWNUM fails with General error: "Unexpected code path"

- v2.1.214: Subquery has incorrect empty parameters since 2.1.210 that breaks sameResultAsLast()

- v2.1.214: LOB issue

- v2.1.214: Conversion 'text' to 'integer' doesn't work anymore : CHARACTER VARYING since H2 2.0 can hold only up to 1,048,576 characters, that's why text was remapped to CHARACTER LARGE OBJECT

#17 Updated by Dănuț Filimon almost 2 years ago

- File table_nextval.png added

Regarding the syntax difference about nextval in #6679-11.

Since we can't use .nextval in version 2.1.210 of H2, I profiled the other 2 functions for getting the next value of a sequence: NEXTVAL() and NEXT VALUE FOR.

I created 2 test (next_value_for_test_10k, nextval_test_10k), each running 5 iterations of 10000 statements on 10 sequences in a single thread. The results (in seconds) were the following:

The statements for the tests are not generated at runtime.

I can provide the .jmx files if necessary.

#18 Updated by Eric Faulhaber almost 2 years ago

Dănuț Filimon wrote:

Regarding the syntax difference about nextval in #6679-11.

Since we can't use

.nextvalin version 2.1.210 of H2, I profiled the other 2 functions for getting the next value of a sequence:NEXTVAL()andNEXT VALUE FOR.I created 2 test (next_value_for_test_10k, nextval_test_10k), each running 5 iterations of 10000 statements on 10 sequences in a single thread. The results (in seconds) were the following:

The statements for the tests are not generated at runtime.

I can provide the.jmxfiles if necessary.

What is currently calling .nextval? P2JH2Dialect.getSequenceNextValString() is implemented as follows:

/**

* Generate the appropriate select statement to retrieve the next value of a sequence.

*

* @param sequenceName

* The name of the sequence to be queried.

*

* @return Dialect-specific string to query the sequence, or {@code null} if not supported.

*/

@Override

public String getSequenceNextValString(String sequenceName)

{

return "call next value for " + sequenceName;

}

Your test results suggest it would be better implemented as:

/**

* Generate the appropriate select statement to retrieve the next value of a sequence.

*

* @param sequenceName

* The name of the sequence to be queried.

*

* @return Dialect-specific string to query the sequence, or {@code null} if not supported.

*/

@Override

public String getSequenceNextValString(String sequenceName)

{

return "nextval(" + sequenceName + ")";

}

Is the latter syntax not supported in the version of H2 we currently use?

#19 Updated by Dănuț Filimon almost 2 years ago

Eric Faulhaber wrote:

What is currently calling .nextval?

P2JH2Dialect.getSequencePrefetchString(), FastCopyHelper.getPkMappingSql() and FastCopyHelper.getSrcTempColumns() currently use .nextval, which is available in the current version of H2 that we use.

P2JH2Dialect.getSequencePrefetchString() is implemented as follows:

@Override public String getSequencePrefetchString(String sequenceName) { return "select " + sequenceName + ".nextval from system_range(?, ?)"; }

In version 2.1.210, the method above will cause an error since .nextval was removed. The solution would be to use next value for or nextval() which are still available.

The tests in #6679-17 show that nextval() takes less time than next value for to execute, but I would not suggest to use it because the H2 documentation for NEXTVAL states thatnextval() method exists only for compatibility, it's recommended to use the standard NEXT VALUE FOR sequenceName instead.

Is the latter syntax not supported in the version of H2 we currently use?

Both next value for and nextval() are supported in the version that we currently use. I will change FWD to use next value for.

#20 Updated by Dănuț Filimon almost 2 years ago

Committed 3821c/rev.14187 where I replaced .nextvalue with next value for in order to prepare for the 2.1.210 H2 version.

next value for also works for the current version of H2.

#21 Updated by Dănuț Filimon almost 2 years ago

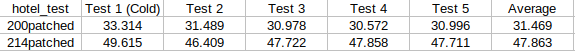

- File table_hotel_gui_comparison.png added

I ran a test on the sql statements obtained from the Hotel GUI application.

The prepared statements obtained from the application caused a problem while trying to test the set of statements in Apache JMeter for 210patched (but not in 200patched). I created a script to translate the prepared statements into regular statements and tested the current version against 210patched.

The hotel_gui_test has 10 threads with 10 loops and consists of 2200 statements with 1050 being transactions. The results (in seconds) were the following:

#22 Updated by Eric Faulhaber almost 2 years ago

Dănuț Filimon wrote:

I ran a test on the sql statements obtained from the Hotel GUI application.

The prepared statements obtained from the application caused a problem while trying to test the set of statements in Apache JMeter for 210patched (but not in 200patched). I created a script to translate the prepared statements into regular statements and tested the current version against 210patched.

Some questions, so I'm clear on the methodology:

- The statements under test ultimately were the same for 200patched and 210patched, correct?

- 200patched was run in PageStore mode, correct?

#23 Updated by Radu Apetrii almost 2 years ago

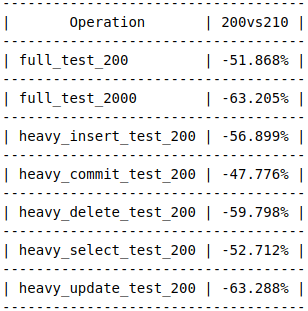

- File table_SQL_4GL_FWD.png added

- File table_SQL_4GL_FWD_methods.png added

- The time is calculated in milliseconds.

- For testing in 4GL I have used the

etimemethod. For testing SQL I have used JMeter. For testing in P2J I have used the convertedetimemethod from 4GL. - The H2 version that I have used is 2.1.210 patched.

- I also commited in testcases project rev. 2364 containing the tests I used.

I have used 3 temporary tables, each one containing 2 columns. The first table had no index, the second one had an index on the first column, and the third one had an index on both columns.

The tests were as follows:

- Create: there were 10000 create statements for each table.

- Update: there were 3 sets of 10000 update statements for each table. The first set contained no sorting statement, the second set contained a sorting statement on the first column of each table and the last set contained a sorting statement on both columns.

- Find: there were 3 sets of 10000 find statements for each table. The first set had a simple loop to iterate through all the records of the tables, the second set contained the keywords

for eachand the last set contained the keywordpreselect. - Delete: there were 10000 delete statements for each table.

- The Update test is the most concerning one, not only because the total time is the highest of all, but also because the SQL occupies around 21.74% of the P2J program's execution time.

- The Find test works really well with an average of around 0.3 seconds.

- The SQL of the Delete test is quite slow, but the fact that it occupies around 95.99% of the P2J program's execution time is a positive.

Mainly, I have noticed some worrying results concerning the tables with one or more indexes. Here's the methods that take considerable amount of time to execute:

#24 Updated by Dănuț Filimon almost 2 years ago

Committed fwd-h2/rev.7 containing version 2.1.210 without any patches from version 1.4.200.

Committed fwd-h2/rev.8 where I applied the patches from version 1.4.200 to version 2.1.210.

Some important notes:- PageStore is not available anymore,

mv_store=falseshould be removed from any configuration - In

p2j.persist.hql.DataTypeHelper,TimestampWithTimeZone.classdoesn't exit in version 2.1.210, I believe that the equivalent isValueTimeTimeZone.class - Usage of the keyword

INTERSECTSwas removed before 2.1.210 was released.

#25 Updated by Dănuț Filimon almost 2 years ago

Eric Faulhaber wrote:

The statements under test ultimately were the same for 200patched and 210patched, correct?

Yes, the same statements were used for 200patched and 210patched. The only difference is that the test was using prepared statements in 200patched and normal statements in 210patched.

200patched was run in PageStore mode, correct?

Yes.

I should mention that I used private databases for each user by omitting the database name: jdbc:h2:mem:;

#26 Updated by Eric Faulhaber almost 2 years ago

Thank you for all the research and testing. In all the work so far...

- Have you discovered any cases where H2 v2.1.210patched was slower than v1.4.200patched (PageStore mode)?

- Have you discovered any regressions using v2.1.210patched with FWD and converted 4GL code, compared to v1.4.200patched (PageStore mode)?

- Are there any other concerns with committing v2.1.210patched to branch 3821c for wider testing?

If the answer to all of the above questions is, "no", then let's update 3821c to use v1.4.200patched. This will involve:

- Committing all breaking code changes needed to support v2.1.210patched (i.e., changes which cannot work with v1.4.200patched), plus a gradle build script dependency update to the same 3821c revision. I think Alexandru knows what to do for the gradle changes, but let me know if not.

- Adding the updated H2 jar file to our artifacts area (so the correct version can be pulled by the gradle build). I can do this part when we are ready. I will just need the final H2 jar file.

#27 Updated by Dănuț Filimon almost 2 years ago

Eric Faulhaber wrote:

Have you discovered any cases where H2 v2.1.210patched was slower than v1.4.200patched (PageStore mode)?

I am still testing the two versions and currently have an issue with the profiling of some sets of statements. I found a way to do H2 profiling using an external sql file. This kind of profiling is inconsistent with my previous findings and I need to redo some of my previous tests.

The tests provided in Performance_Testing_the_H2_Database may not be accurate.

Have you discovered any regressions using v2.1.210patched with FWD and converted 4GL code, compared to v1.4.200patched (PageStore mode)?

I did not discover any regression.

Are there any other concerns with committing v2.1.210patched to branch 3821c for wider testing?

Apart from the ones mentioned in #6679-24, I need to mention that H2 2.1.210 has new keywords that are not specified in our application (eq: ANY, BETWEEN etc.).

These new keywords can be accessed here: https://www.h2database.com/html/advanced.html?highlight=keyword&search=keyword#keywords

Committed fwd-h2/rev.9. I applied a few changes from versions 2.1.212 and 2.1.214.

#28 Updated by Eric Faulhaber almost 2 years ago

Dănuț Filimon wrote:

Eric Faulhaber wrote:

Have you discovered any cases where H2 v2.1.210patched was slower than v1.4.200patched (PageStore mode)?

I am still testing the two versions and currently have an issue with the profiling of some sets of statements. I found a way to do H2 profiling using an external sql file. This kind of profiling is inconsistent with my previous findings and I need to redo some of my previous tests.

The tests provided in Performance_Testing_the_H2_Database may not be accurate.

Please explain when you have a better idea. We don't want to roll out v2.1.210 if it is likely to make performance worse for our use patterns. Thank you.

Have you discovered any regressions using v2.1.210patched with FWD and converted 4GL code, compared to v1.4.200patched (PageStore mode)?

I did not discover any regression.

Are there any other concerns with committing v2.1.210patched to branch 3821c for wider testing?

Apart from the ones mentioned in #6679-24, I need to mention that H2 2.1.210 has new keywords that are not specified in our application (eq: ANY, BETWEEN etc.).

These new keywords can be accessed here: https://www.h2database.com/html/advanced.html?highlight=keyword&search=keyword#keywords

Please add any new keywords to the list returned by P2JH2Dialect.getReservedKeywords().

Committed fwd-h2/rev.9. I applied a few changes from versions 2.1.212 and 2.1.214.

What does this mean?

#29 Updated by Dănuț Filimon almost 2 years ago

Eric Faulhaber wrote:

Dănuț Filimon wrote:

Committed fwd-h2/rev.9. I applied a few changes from versions 2.1.212 and 2.1.214.

What does this mean?

fwd-h2 is our current patched H2 branch. I added revision 7 (H2 2.1.210 version), 8 (Moved patches of the current version to 2.1.210) and 9 (major fixes from versions 2.1.212 and 2.1.214, h2database/issues/3414 and h2database/issues/3434). We can revert anytime to the previous version if things don't go as expected with 2.1.210.

#30 Updated by Eric Faulhaber almost 2 years ago

Dănuț Filimon wrote:

Eric Faulhaber wrote:

Dănuț Filimon wrote:

Committed fwd-h2/rev.9. I applied a few changes from versions 2.1.212 and 2.1.214.

What does this mean?

fwd-h2 is our current patched H2 branch. I added revision 7 (H2 2.1.210 version),

Good.

8 (Moved patches of the current version to 2.1.210)

Good.

and 9 (major fixes from versions 2.1.212 and 2.1.214, h2database/issues/3414 and h2database/issues/3434).

I don't really want to be in the business of backporting fixes from one version of H2 to another, since every deviation/patch we carry requires effort whenever we consider a newer version of H2. However, I agree that these two fixes seem pretty critical, so let's leave them in our H2 v9 for now. Did you hit these bugs in your testing of v2.1.210?

It is also quite important that we get clarity on the following, as we need to make a go/no-go decision on v2.1.210 as soon as possible:

I found a way to do H2 profiling using an external sql file. This kind of profiling is inconsistent with my previous findings and I need to redo some of my previous tests.

The tests provided in Performance_Testing_the_H2_Database may not be accurate.

Thank you.

#31 Updated by Alexandru Lungu almost 2 years ago

The tests from our current Performance_Testing_the_H2_Database are decent for 1.4.200, but are no quite accurate for 2.1.210 for the following reason:

- a JMX from our current performance testing guide does a single fat Update Statement (with ~200 statements) for each user, several times. Basically, it tests the performance of "batch prepared statements" (if this makes sense - check below for terminology). The good side is that 1.4.200 allows such "batch" testing - it automatically parses and individually executes each statement. The downside is that latency is seriously taken into account - sending nearly 200kB of statements to 20 users several times spoils the testing. Also the manipulation/parsing/preparing of such chunks is not quite efficient.

- "batch prepared statements" can't be properly used with 2.1.210. This version doesn't allow the execution of more than one prepared statement at a time. However, using an external SQL file does the trick, as Apache JMeter runs the prepared statements "one-by-one". Doing such thing severely reduces the execution time (7/8 times faster) as H2 doesn't deal with 200kB of bulk statements, but with atomic, easy to parse, cachable light statements.

By "batched prepared statements" I mean insert into tt(f1) values(?) {1: 2}; select * from tt where f1 = ?; {1: 2}. By "batched statements" I mean insert into tt(f1) values(2); select * from tt where f1 = 2;. By "one-by-one profiling" I mean executing a single (prepared) statement per JDBC request. One-by-one profiler is the closest to our use-case.

- #6679-5 doesn't use "batched prepared statements", but simply "batched statements". The results there tells us that 2.1.210 is way more powerful when dealing with batched statements: the parsing and the big string manipulation are better. However, this is not really close to our use-case with H2 (although fast parsing is something we tried to optimize in the past). These tests should be redone to use "one-by-one" profiling.

- #6679-17 seems to be related to the deprecated

nextval. It is out of scope here. - #6679-21 uses "batched prepared statements" for 1.4.200 and "batch statements" for 2.1.210. The test may be inconsistent as 1.4.200 should also do the preparing and extra parsing, delaying the execution. This test may be interesting if it is run with one-by-one profiler!

- #6679-23 uses

etimeand is not related to 1.4.200 vs 2.1.210

I will update Performance_Testing_the_H2_Database to include this information about "batch prepared statements" and the fact that this is an adequate way of testing only for <= 1.4.200 versions. I will let Danut do an update about external SQL file profiling ("one-by-one").

To make a proper go/no-go decision I suggest:- retest 2.1.210 (and eventually 2.1.212 and 2.1.214) with one-by-one strategy. Check if we still have the same preliminary time improvement. Having some promising results motivates us to continue testing.

- retest the Hotel GUI examples from #6679-21. Check if we have regressions with prepared statements on 2.1.210. Also compare to H2 1.4.200. Having a stable version is a must, so the Hotel GUI test is critical. I did some testing with 2.1.210 on some bigger customer applications and there are no straight-forward regressions (except the lack of defined keywords for H2).

- Do the same

etimetests from #6679-23, but with both H2 versions. This should be the decisive part as it directly makes use of FWD persistence. Having a >20% time improvement here should be a go. - Even if we don't succeed in integrating 2.1.210, it is important to document the findings in Performance_Testing_the_H2_Database. Having an accurate profiling suite for >= 2.1.210 will save us time in the future.

#32 Updated by Eric Faulhaber almost 2 years ago

Alexandru Lungu wrote:

[...]

Thank you for the explanation.

I will update Performance_Testing_the_H2_Database to include this information about "batch prepared statements" and the fact that this is an adequate way of testing only for <= 1.4.200 versions. I will let Danut do an update about external SQL file profiling ("one-by-one").

To make a proper go/no-go decision I suggest:

- retest 2.1.210 (and eventually 2.1.212 and 2.1.214) with one-by-one strategy. Check if we still have the same preliminary time improvement. Having some promising results motivates us to continue testing.

Agreed.

- retest the Hotel GUI examples from #6679-21. Check if we have regressions with prepared statements on 2.1.210. Also compare to H2 1.4.200. Having a stable version is a must, so the Hotel GUI test is critical. I did some testing with 2.1.210 on some bigger customer applications and there are no straight-forward regressions (except the lack of defined keywords for H2).

Agreed.

- Do the same

etimetests from #6679-23, but with both H2 versions. This should be the decisive part as it directly makes use of FWD persistence. Having a >20% time improvement here should be a go.

Agreed, except I would say any measurable improvement that is statistically significant should be a go, assuming the data from the other points is conclusive and we have no known regressions.

- Even if we don't succeed in integrating 2.1.210, it is important to document the findings in Performance_Testing_the_H2_Database. Having an accurate profiling suite for >= 2.1.210 will save us time in the future.

Agreed, but let's hope we see an improvement.

How long do you think it will take to implement this revised plan? We need to get any updates which improve performance into customers' hands as soon as possible.

#33 Updated by Dănuț Filimon almost 2 years ago

- File table_one_by_one.png added

Alexandru Lungu wrote:

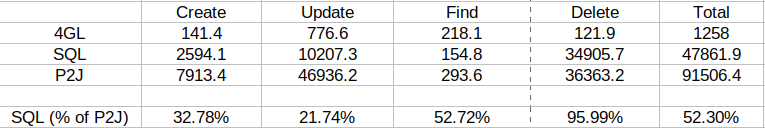

retest 2.1.210 (and eventually 2.1.212 and 2.1.214) with one-by-one strategy. Check if we still have the same preliminary time improvement. Having some promising results motivates us to continue testing.

I created a new set of tests for Apache JMeter, I tested versions 200patched, 210patched, 212 and 214 and calculated the percentages between 200patched and the other versions.

Each tests consists of 5000 statements (with 250 initial insert statements) made on a temporary table with 10 fields:- full_test, heavy_commit, heavy_delete and heavy_update have 10 users and 5 iterations

- heavy_insert has 5 users and 2 iterations

- heavy_select has 5 users and 5 iterations

The percentages obtained after comparing the 200patched version with the other 3 versions are:

There is an improvement when deleting, selecting and updating data in each version and there isn't a significant difference between 210, 212 and 214 when it comes to performance.

retest the Hotel GUI examples from #6679-21. Check if we have regressions with prepared statements on 2.1.210. Also compare to H2 1.4.200. Having a stable version is a must, so the Hotel GUI test is critical. I did some testing with 2.1.210 on some bigger customer applications and there are no straight-forward regressions (except the lack of defined keywords for H2).

While testing Hotel GUI I get the following error when using version 2.1.210 patched with a shared database: org.h2.jdbc.JdbcSQLTimeoutException: Timeout trying to lock table "SYS"; SQL statement. This error doesn't appear when using 1.4.200 patched. I did the same test using version 2.1.214 (not patched) and I get the same error. This error appeared in version 1.4.200 (not patched), most probably some extra patches will fix this problem.

Do the same

etimetests from #6679-23, but with both H2 versions. This should be the decisive part as it directly makes use of FWD persistence. Having a >20% time improvement here should be a go.

These tests can be done without extra patching.

Eric Faulhaber wrote:

How long do you think it will take to implement this revised plan?

I think in 1-2 days we can make a decision regarding the version we want to update to.

#34 Updated by Eric Faulhaber almost 2 years ago

Some things to note for your testing:

- We do not use persistent tables with H2 for customer applications. All use of H2 in these environments is with temporary tables, in an embedded, in-memory database. This is the critical environment to test. We only use a standalone H2 database with persistent tables for light, internal testing, or to allow a simple setup for sample/demo code, like the hotel examples.

- Although FWD allows switching between private and shared H2 databases for its legacy (4GL) temp-table support, in practice, we only use private databases.

It seems from your latest results that there is potentially still a decent benefit from moving to a newer version (probably 214), though we want to ensure our patches (if still relevant with newer versions) are applied, and that we see those tests executed using temporary tables in an embedded, in-memory database as well, if that was not done up until now. Thank you.

#35 Updated by Eric Faulhaber almost 2 years ago

I'm trying to use H2 v214 to see if it still shows a memory leak I see when running a converted application with v200. However, upon simply replacing the patched v200 jar with the unpatched v214 downloaded from the H2 website, I get this on server startup:

com.goldencode.p2j.cfg.ConfigurationException: Initialization failure

at com.goldencode.p2j.main.StandardServer.hookInitialize(StandardServer.java:2181)

at com.goldencode.p2j.main.StandardServer.bootstrap(StandardServer.java:1055)

at com.goldencode.p2j.main.ServerDriver.start(ServerDriver.java:499)

at com.goldencode.p2j.main.CommonDriver.process(CommonDriver.java:518)

at com.goldencode.p2j.main.ServerDriver.process(ServerDriver.java:217)

at com.goldencode.p2j.main.ServerDriver.main(ServerDriver.java:874)

Caused by: java.lang.NoClassDefFoundError: org/h2/api/TimestampWithTimeZone

at com.goldencode.p2j.persist.hql.DataTypeHelper.initJavaClasses(DataTypeHelper.java:503)

at com.goldencode.p2j.persist.hql.DataTypeHelper.initialize(DataTypeHelper.java:162)

at com.goldencode.p2j.persist.hql.DataTypeHelper.<clinit>(DataTypeHelper.java:144)

at com.goldencode.p2j.persist.Persistence.initialize(Persistence.java:847)

at com.goldencode.p2j.main.StandardServer$10.initialize(StandardServer.java:1300)

at com.goldencode.p2j.main.StandardServer.hookInitialize(StandardServer.java:2177)

... 5 more

Caused by: java.lang.ClassNotFoundException: org.h2.api.TimestampWithTimeZone

at java.net.URLClassLoader.findClass(URLClassLoader.java:387)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:352)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

... 11 more

Is this something which is fixed with the patched v214? If so, can you please get the patched H2 v214 jar file to me? I don't need to wait for the results of your other testing, as I'm just replacing v200 temporarily for my specific task. Thank you.

#36 Updated by Dănuț Filimon almost 2 years ago

Eric Faulhaber wrote:

Is this something which is fixed with the patched v214?

No, this is related to the FWD configuration strictly. Please check #6679-24.

I am creating a patched 214 today for testing.

#37 Updated by Dănuț Filimon almost 2 years ago

- File h2-2.1.214-patched.jar added

- File table_hotel_test_200vs214.png added

- File table_etime_test.png added

- File patch_2.1.214.patch

added

added

Alexandru Lungu wrote:

retest the Hotel GUI examples from #6679-21. Check if we have regressions with prepared statements on 2.1.210. Also compare to H2 1.4.200. Having a stable version is a must, so the Hotel GUI test is critical. I did some testing with 2.1.210 on some bigger customer applications and there are no straight-forward regressions (except the lack of defined keywords for H2).

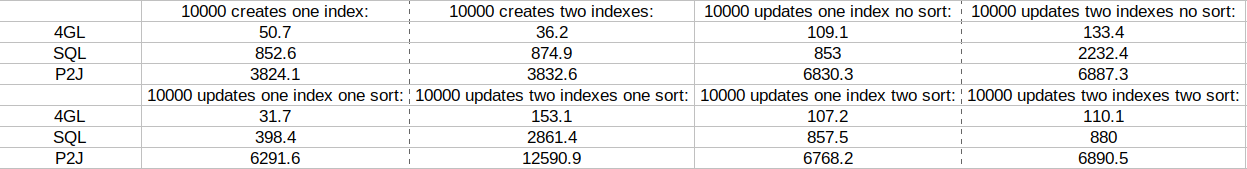

Considering the results in #6679-33, I applied the patches from version 1.4.200 to 2.1.214 and tested the two versions with statements from the Hotel GUI. The test consisted in 6000 statements with 25 users and 25 iterations and the results (in seconds) were:

As shown in the table above, version 1.4.200patched has a better performance than 2.1.214patched.

Do the same

etimetests from #6679-23, but with both H2 versions. This should be the decisive part as it directly makes use of FWD persistence. Having a >20% time improvement here should be a go.

I did 5 tests for each version, 1.4.200patched and 2.1.214patched, and calculated the average result from the converted application in milliseconds:

This test also explains the results of the Hotel GUI test.

Note that the tests in #6679-23 were done using version 2.1.210patched and the results obtained from the P2J program are very different in comparison with version 1.4.200patched.

Eric Faulhaber wrote:

can you please get the patched H2 v214 jar file to me?

I attached a .patch file to this thread with the necessary modifications to use version 2.1.214 and the .jar file with the patches of version 1.4.200.

#38 Updated by Eric Faulhaber almost 2 years ago

Dănuț Filimon wrote:

Alexandru Lungu wrote:

retest the Hotel GUI examples from #6679-21. Check if we have regressions with prepared statements on 2.1.210. Also compare to H2 1.4.200. Having a stable version is a must, so the Hotel GUI test is critical. I did some testing with 2.1.210 on some bigger customer applications and there are no straight-forward regressions (except the lack of defined keywords for H2).

Considering the results in #6679-33, I applied the patches from version 1.4.200 to 2.1.214 and tested the two versions with statements from the Hotel GUI. The test consisted in 6000 statements with 25 users and 25 iterations and the results (in seconds) were:

As shown in the table above, version 1.4.200patched has a better performance than 2.1.214patched.

Do the same

etimetests from #6679-23, but with both H2 versions. This should be the decisive part as it directly makes use of FWD persistence. Having a >20% time improvement here should be a go.I did 5 tests for each version, 1.4.200patched and 2.1.214patched, and calculated the average result from the converted application in milliseconds:

This test also explains the results of the Hotel GUI test.

Note that the tests in #6679-23 were done using version 2.1.210patched and the results obtained from the P2J program are very different in comparison with version 1.4.200patched.

If I'm understanding your results correctly, 2.1.214patched is actually much slower in most operations than the version we are using now, when compared in a way that does not leverage the newer version's more efficient batching capabilities (which we do not leverage in our use patterns), when simulating 25 concurrent users. Please tell me if I'm misunderstanding your findings.

Note that I'm basing this on your test description for the top table; I don't know how the tests were performed for the bottom table, which actually is the more concerning set of results. Were these performed single-user, manually running converted 4GL programs, or were they automated and simulating multiple concurrent users?

The following assumes multiple users...

Can you discern where 2.1.214patched is spending its time in these tests? Based on your description of the test conditions, at least for the top table (6,000 statements, 25 concurrent users, 25 iterations), this approach will test scalability. This is good to know, but first we want to understand the performance of the operations themselves, not at scale. If the synchronization within H2 is poor, the overall time with 25 concurrent users can suggest a very slow result, even if each individual statement is executed quickly.

I mention this because this is what we found with older versions of H2, and it was the reason behind some of our patches. It has been a while since I reviewed those patches, but if they were just improving synchronization in the PageStore engine, there may still be poor synchronization in the MvStore engine which is skewing these results. If you can understand where the time is being spent, and if you find it is mostly spent waiting on synchronized resources, there may be something we can do within the MvStore engine to improve this, as we did previously in the PageStore engine.

A good start to understanding this would be to measure the results with only a single user (i.e., 6,000 statements, 1 user, 25 iterations). In other words, measure the performance without forcing synchronization across multiple users. If 2.1.214patched performs well in that comparison, then it is worth delving deeper and seeing if poor synchronization is the root of the problem in the multi-user tests. OTOH, if 2.1.214patched is slower in a single-user comparison, the upgrade is probably a no-go.

If the bottom table reflects the results of single-user testing, then it looks like we already have our answer: with 2.1.214patched that much slower in a single-user scenario running actual converted code, it seems the upgrade would be a no-go.

#39 Updated by Dănuț Filimon almost 2 years ago

- File table_hotel_retest_200vs214.png added

Eric Faulhaber wrote:

I don't know how the tests were performed for the bottom table, which actually is the more concerning set of results. Were these performed single-user, manually running converted 4GL programs, or were they automated and simulating multiple concurrent users?

If the bottom table reflects the results of single-user testing, then it looks like we already have our answer: with 2.1.214patched that much slower in a single-user scenario running actual converted code, it seems the upgrade would be a no-go.

The results in the bottom table from #6679-37 were obtained by manually running a converted 4GL program with a private database, in memory (a single-user test) and by using the etime method.

A good start to understanding this would be to measure the results with only a single user (i.e., 6,000 statements, 1 user, 25 iterations). In other words, measure the performance without forcing synchronization across multiple users.

I retested hotel with your suggested approach: 6000 statements, 1 user and 250 iterations (I increased the number of iterations in order to obtain a clear difference between the two versions).

The results were similar to the ones I obtained previously:

#40 Updated by Greg Shah almost 2 years ago

Clearly, we cannot move ahead with the new version of H2. At least we cannot without something that changes the performance profile for our use cases. It is probably worth investigating the source(s) of the difference. If there is something we can do to make the performance at least as good, then we could move to the later version and have more future options. Being stuck at the old unsupported version is not a good long term plan.

I'm also wondering about the transaction processing overhead in H2 in general (even in the 200patched version). We know that use of auto-commit is costly and maybe there are other aspects of transaction processing/commit/rollback which are costly but not needed for our case. We are implementing a single-user effectively private table and when it is in NO-UNDO mode it should not need any transaction processing at all, but in fact it does all the normal transaction processing to commit changes as they are made. That makes no sense to me. Can we bypass this or neuter it in some way to eliminate the associated processing?

Finally, I think we need to do the following:

- Make the single user and multi-user 4GL code tests a part of the Hotel GUI project.

- Implement a simple way to run these and capture the results.

- Document it.

I'd like for it to be easy for anyone to check the most important performance characteristics of a new H2 version. When checking a new version, we should run these first before running any other tests. The 4GL use cases are what matter.

#41 Updated by Alexandru Lungu almost 2 years ago

Greg Shah wrote:

Clearly, we cannot move ahead with the new version of H2. At least we cannot without something that changes the performance profile for our use cases. It is probably worth investigating the source(s) of the difference. If there is something we can do to make the performance at least as good, then we could move to the later version and have more future options. Being stuck at the old unsupported version is not a good long term plan.

I think it is clear that the engine difference is the one that really downgrades the performance. MVStores looks like an engine which is rather optimized for many concurrent users rather than for single-user heavy-lifting. We can try to optimize MVStore to fit our single-user use-case, but I expect pretty intrusive changes to suppress the extra synchronization we don't use.

I'm also wondering about the transaction processing overhead in H2 in general (even in the 200patched version). We know that use of auto-commit is costly and maybe there are other aspects of transaction processing/commit/rollback which are costly but not needed for our case. We are implementing a single-user effectively private table and when it is in NO-UNDO mode it should not need any transaction processing at all, but in fact it does all the normal transaction processing to commit changes as they are made. That makes no sense to me. Can we bypass this or neuter it in some way to eliminate the associated processing?

This is something I also started to think of after we integrated private databases. I agree that COMMIT and transaction processing in general may be an overhead when we use private database with NO-UNDO. I wonder if there is any case in which a physical 4GL user can have multiple transactions to one single temporary table ... maybe there is none.

- H2 defaults

READ_COMMITTED. - H2 allows

READ_UNCOMMITTEDwhich may be used in our "no-undo private database" scenario. - H2 doesn't have a

NO_TRANSACTIONisolation level.

We can test READ_UNCOMMITTED for now, but we really should find a way to eliminate the transaction support and synchronization for good (in the NO-UNDO private database case).

I will also like to mention the fact that H2 is automatically updating its meta tables (in a synchronized way) after each DDL operation. AFAIK, this is not quite helpful for FWD, as our persistence layer keeps track of the metadata anyways (in Java metadata models or in 4GL converted meta tables). There are only some parts in FWD where INFORMATION_SCHEMA is used to interact with sequences. Even if the meta update is done only for non-temporary objects in H2, the synchronization is done for temporary objects as well. I think we can easily patch H2 to disable meta tables (INFORMATION_SCHEMA).

#42 Updated by Greg Shah almost 2 years ago

I wonder if there is any case in which a physical 4GL user can have multiple transactions to one single temporary table ... maybe there is none.

If the scope of a temp-table is broader than the scope of a full transaction, it is possible for there to be more than one full transaction during the lifetime of a temp-table.

This only would affect undoable temp-tables and only while a transaction is active.

#43 Updated by Greg Shah almost 2 years ago

Is the H2 storage engine API implemented in a clean manner or is the storage engine implemented as pieces of code spread throughout the project?

What about the transaction processing semantics? Is it inside the storage engine or is it in the H2 code that calls the storage engine?

#44 Updated by Dănuț Filimon almost 2 years ago

I am currently working on integrating a 4GL code test into Hotel GUI project. The test consists of simple operations using indexes, sort or none of those (similar to the tests in #6679-37). I am still working on the UI because it needs to also show the testing parameters (number of records in the tables, number of statements executed), which I omitted. It won't take long before I add it to the Hotel GUI application.

Greg wrote:

Is the H2 storage engine API implemented in a clean manner or is the storage engine implemented as pieces of code spread throughout the project?

The store engine doesn't have a clean implementation, there is no proper interface for it.

What about the transaction processing semantics? Is it inside the storage engine or is it in the H2 code that calls the storage engine?

The processing semantics for transactions are handled in the mvstore package and not in the MVStore class. The MVStore is stored as a parameter in a final class called Store, which is used often to check if the MVStore is open or not before moving forward. The Store class also holds a TransactionStore which is used to start and register a transaction. Besides MVStore, there is MVMap which is used when executing all statements (all read and write operations) and is part of the MVStore. I think it is hard to stop using MVStore since the application depends on the resources that the MVStore has.

#45 Updated by Dănuț Filimon over 1 year ago

Committed hotel_gui/rev.252. I added the H2 performance tests to the Hotel GUI application.

Added a window with a browse which displays results from 4 types of operations (create, update, find, delete). I wrote a comment in each main test file that contains an example in order to easily expand the file with additional tests. The results obtained can be exported into a .csv file (after exporting the results, you can find a file "performance.csv" in the deploy/client folder). H2 testing window can be accessed by pressing "CTRL-ALT-H" anywhere in the application.

I found a few differences in behavior between 4GL and the FWD application:- If the H2 test window is opened before logging into the hotel app, it is impossible to log in or exit the app right after.

- If you login in the application and then open the H2 window, when logging out, the login window will not appear right after (Pressing ESC once will show an error, and pressing it again will display the login window).

- The following command combination spends a considerable amount of time to execute: FIND -> DELETE -> FIND, the time spent to "process" the second find command is considerably long after deleting all the temp-table entries (The temp-tables are empty, so the find command should finish faster). I will look up into this and see what is going on here, it may be related to the caching that FWD does.

#46 Updated by Eric Faulhaber 5 months ago

We have long since decided not to move ahead with MVStore, and we have made a significant investment in improving H2 using PageStore. Can this issue be closed? If not:

- what is left to do that is not already covered by other, more specific tasks; and

- what is the current % done?

#47 Updated by Alexandru Lungu 5 months ago

- Status changed from WIP to Internal Test

- % Done changed from 0 to 100

This task didn't have quite a well-define purpose. It was mostly "do your best improving H2". I can't appreciate a proper %, but it is definitely not 0.

There are no loose-ends in this task, so we can close it and work on specific items separately.